Secure AI agents and MCP servers before they touch your data.

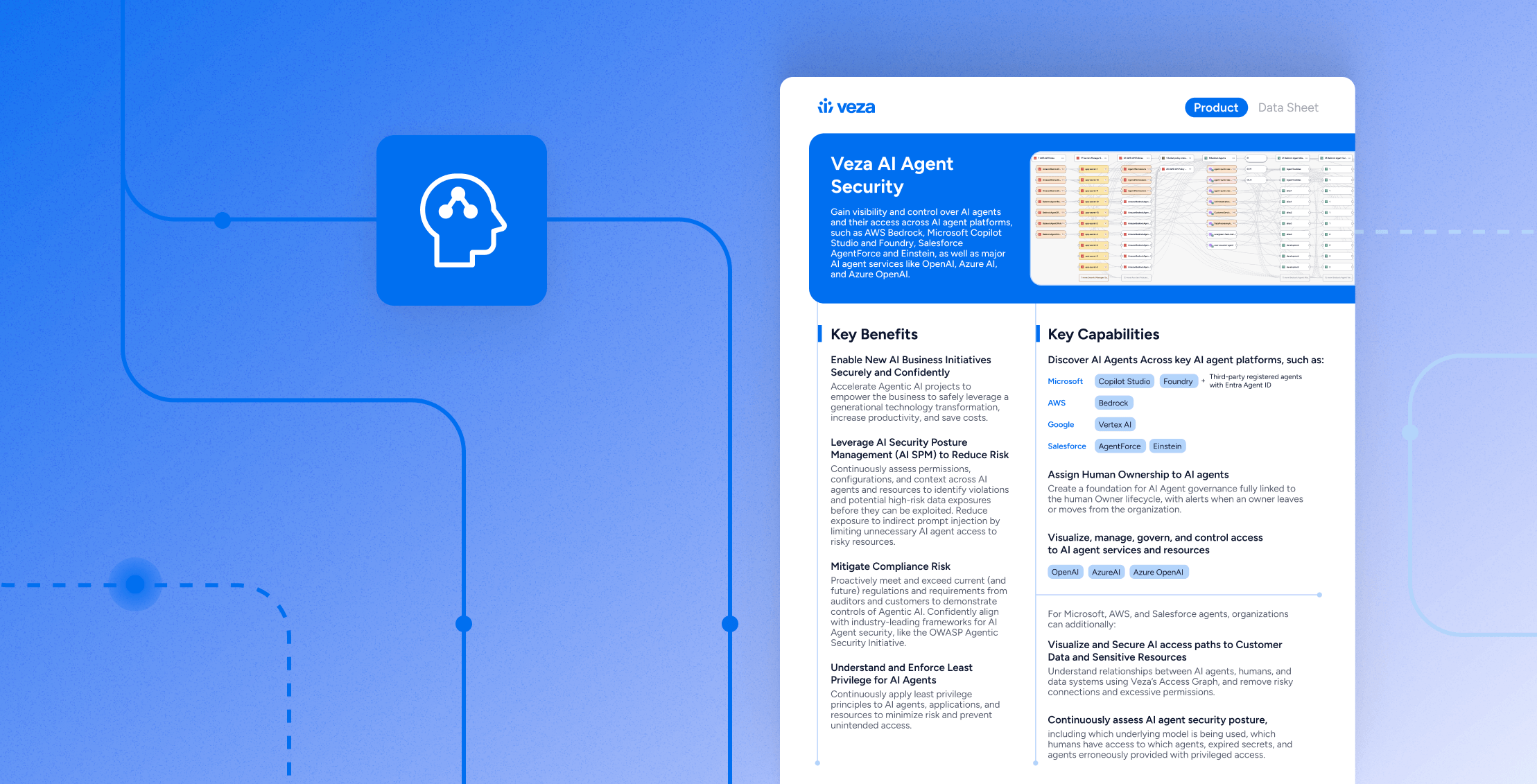

AI Agent Security gives identity and security teams visibility and control over AI agents and their access across leading AI platforms and services. Use a single access graph to understand how agents, humans, and data systems relate across AWS Bedrock, Microsoft Copilot Studio, Salesforce AgentForce and Einstein, Google Vertex AI, OpenAI, Azure AI, Azure OpenAI, and GitHub MCP servers. Govern agentic AI like the rest of your critical identity perimeter, not as a one-off experiment.

Why AI Agent Security

- Enable AI initiatives securely – Remove blind spots so security can say “yes” to agentic AI projects without guessing at risk.

- Apply AI Security Posture Management (AI SPM) – Continuously assess permissions, configuration, and context across AI agents and resources to catch high-risk access before it is exploited.

- Reduce exposure to indirect prompt injection – Limit what agents can see and do so untrusted inputs cannot quietly pivot into critical systems.

- Meet emerging AI regulations confidently – Show auditors and customers you have controls in place that align with leading frameworks such as the OWASP Agentic Security Initiative.

- Enforce least privilege for AI agents – Continuously tune access for agents, applications, and resources so they only have the permissions they actually need.

What you will discover inside

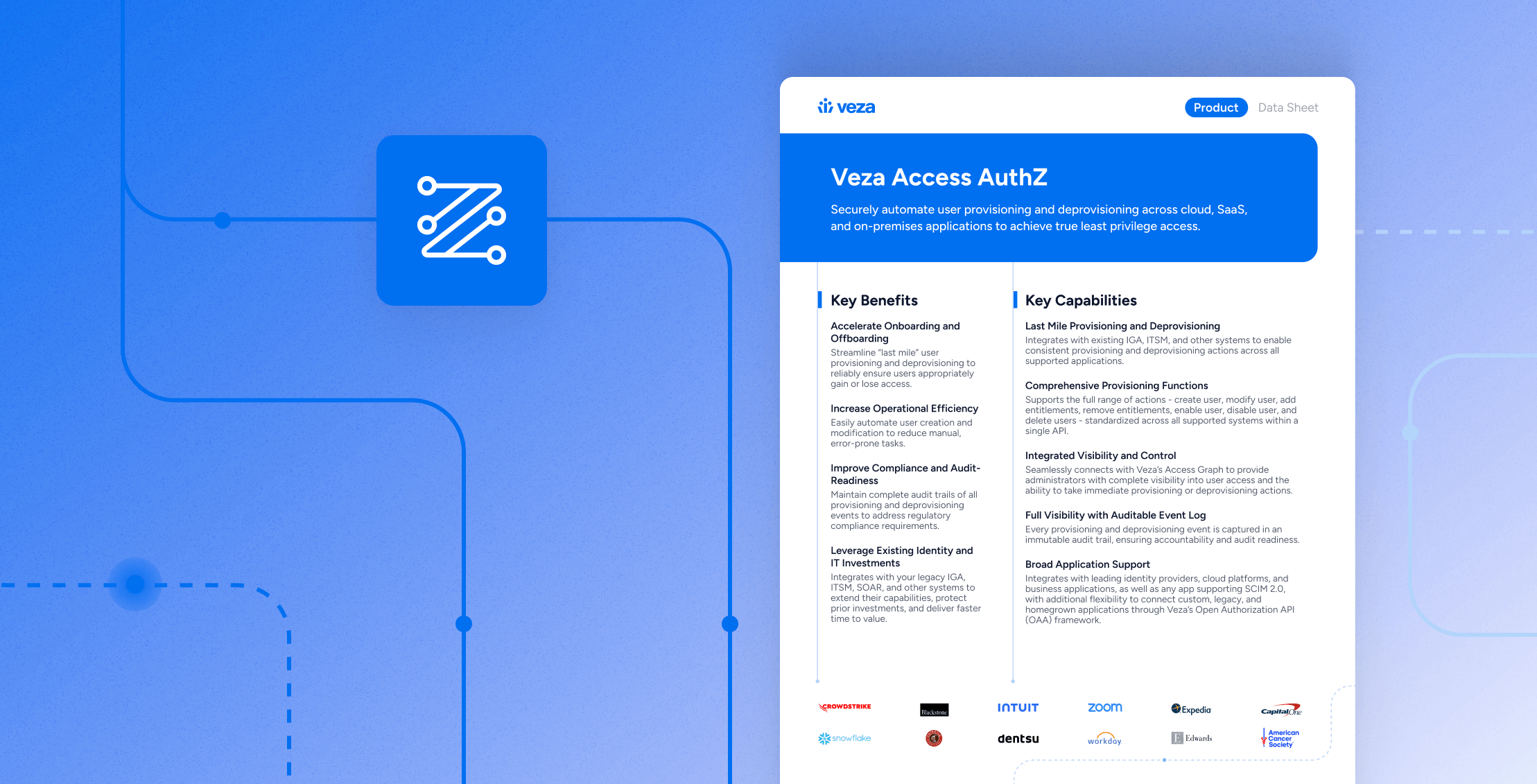

- How to discover AI agents and MCP servers across Microsoft (Copilot Studio and third-party agents with Entra Agent ID), AWS Bedrock, Salesforce AgentForce and Einstein, and Google Vertex AI, along with GitHub MCP servers.

- How to assign human ownership to AI agents, attach governance duties, and trigger alerts when owners leave or change roles.

- How to visualize and govern access from AI agents to services like OpenAI, Azure AI, and Azure OpenAI, including which models are in use and which humans can operate which agents.

- How to use Veza’s Access Graph to understand AI agent access paths to customer data and other sensitive resources, then remediate risky connections and excessive permissions.

- A side-by-side comparison of legacy-solution challenges and the Veza approach, showing why spreadsheets and siloed inventories cannot keep up with AI agent risk.

- An extended feature list that summarizes discovery coverage, access risk detection, blast-radius analysis, ownership mapping, and reporting.

How it works (at a glance)

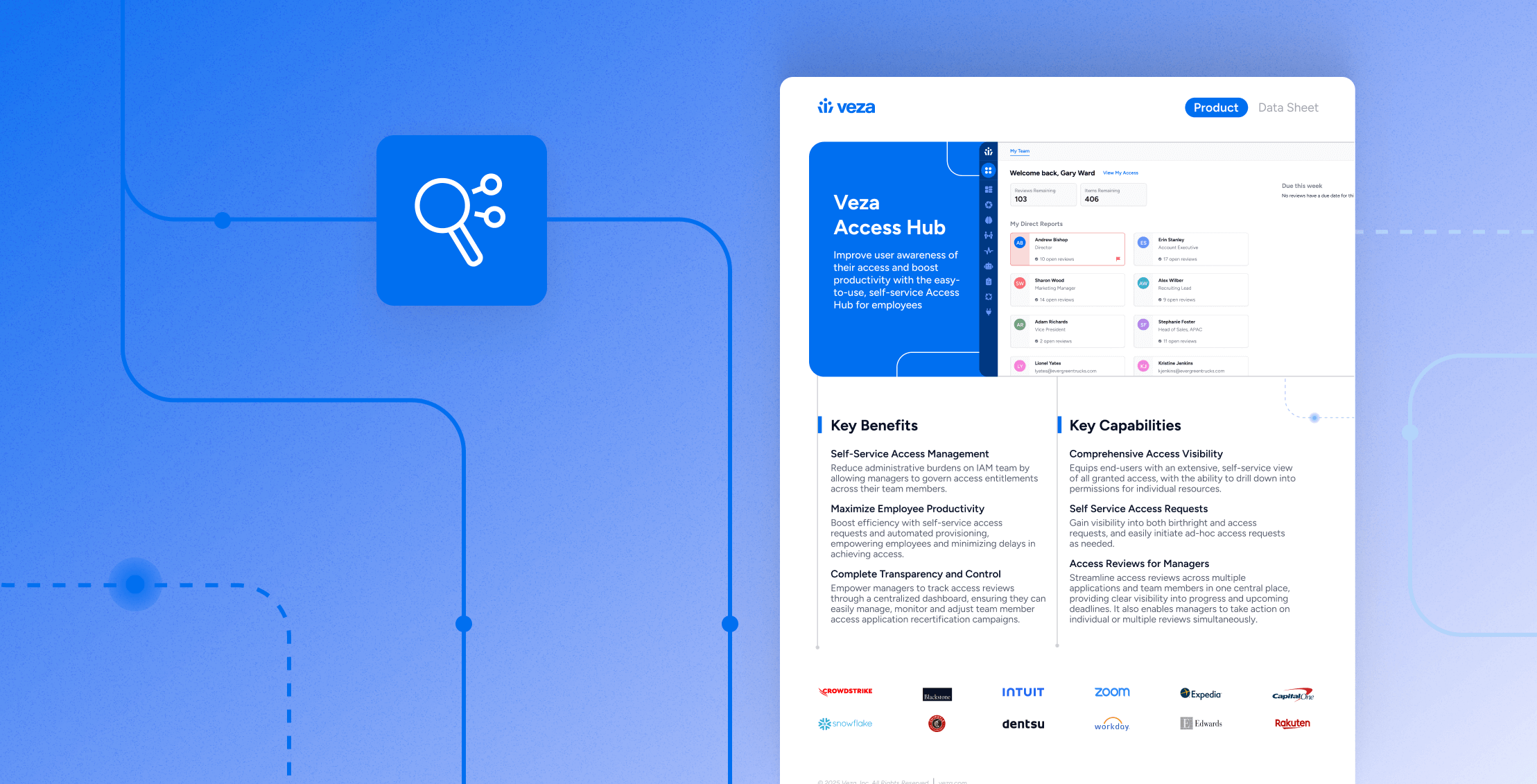

- Discover AI agents and MCP servers – Connect Veza to your AI platforms and services to automatically discover agents, MCP servers, and related non-human identities across your environment.

- Attach human ownership and context – Map each agent to one or more human owners and teams so there is always a clear accountable party for access, behavior, and lifecycle decisions.

- Map and evaluate access paths – Use the Access Graph to see which agents can reach which data systems and resources, what models and secrets they use, and where over-permissioning or misconfiguration creates risk.

- Enforce least privilege and reduce blast radius – Run access risk detection and blast-radius analysis to right-size permissions, remove unused or risky access, and document the impact of each change across systems.
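
To make the idea of access paths and blast radius concrete, here is a minimal Python sketch. It is not Veza's implementation or API: the graph contents, the node names, and the `blast_radius` and `unused_permissions` helpers are all hypothetical, chosen only to illustrate reachability over an access graph and a simple granted-versus-used comparison for least privilege.

```python
from collections import deque

# Hypothetical access graph: each key can reach the nodes in its list.
# All names are illustrative assumptions, not real Veza identifiers.
ACCESS_GRAPH = {
    "human:alice": ["agent:support-bot"],
    "agent:support-bot": ["mcp:github", "role:crm-reader"],
    "mcp:github": ["repo:billing-service"],
    "role:crm-reader": ["db:customer-records"],
    "agent:report-bot": ["role:crm-reader"],
}


def blast_radius(graph, start):
    """Return every node reachable from `start` via breadth-first search."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen and neighbor != start:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def unused_permissions(granted, used):
    """Return granted permissions that were never exercised, sorted."""
    return sorted(set(granted) - set(used))


if __name__ == "__main__":
    # Everything a compromised support agent could touch.
    print(sorted(blast_radius(ACCESS_GRAPH, "agent:support-bot")))
    # Candidates for removal when right-sizing an agent's access.
    print(unused_permissions(["read", "write", "delete"], ["read"]))
```

In this toy model, the blast radius of an agent is simply the set of resources reachable from it, so removing an edge such as the agent's link to `role:crm-reader` directly shrinks what a prompt injection or leaked credential could expose.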

Outcomes

- Faster approval for AI projects because security and GRC teams have concrete evidence of agent behavior and access.

- Reduced risk from over-permissioned AI agents, misconfigured MCP servers, and hidden machine-to-data paths.

- Stronger alignment with AI security frameworks and regulatory expectations, backed by traceable controls and reports.

- Smaller blast radius if a prompt injection, credential leak, or model misconfiguration occurs, since agents are already constrained to least privilege.

- A single, consistent way to manage AI agents alongside human and non-human identities instead of another standalone inventory.

Who it is for

- Identity and access management (IAM) and IGA owners

- Security and identity architects responsible for AI adoption

- AI platform, MLOps, and application teams building AI agents into products and workflows

- Data, SaaS, and cloud platform owners who must protect customer and sensitive data

- GRC, risk, and audit leaders who need defensible evidence of AI agent controls

Download the datasheet

Get the full AI Agent Security data sheet to see coverage details, example access graph views, and a deeper breakdown of features and outcomes. Share it with AI platform owners, security architects, and GRC stakeholders to align on how your organization will govern agentic AI in production.