Sam’s post about the new “ChatGPT Agent” perfectly encapsulates the immense promise and the inherent risks of the new agentic era. The challenges he outlines—unpredictable actions, the potential for data leakage, and the need for careful, incremental adoption—are precisely the issues that modern identity security platforms are designed to address.

Veza provides the foundational visibility and control necessary to manage the risks of powerful, autonomous AI agents. Here’s how Veza’s platform directly addresses the concerns raised:

1. Demystifying the “Minimum Access Required” Problem

Sam’s core recommendation is to “give agents the minimum access required to complete a task.” This is the principle of least privilege, and it’s the most effective strategy for mitigating the risks of unpredictable AI agents. However, enforcing this principle is nearly impossible without deep visibility.

The Challenge: An AI agent, like an application or a human, is granted access through credentials (such as API keys or tokens) that are tied to permissions across dozens of systems. It’s incredibly difficult to know what the agent’s effective permissions are, how agents talk to other agents, how runtime access is handled for privileged actions, and so on. Does giving it access to your email also inadvertently give it access to delete files in your cloud drive? Existing identity tools were not built for agentic AI use cases, and they struggle to answer basic questions such as:

- What agents do we have in our environments?

- What do agents have access to?

- What human access has been shared with agents?

- What are the agents doing with the access permissions they have?

- When these agents collaborate, what access permissions do they have collectively?

How Veza Access AI Helps: Veza’s Authorization Graph solves this by mapping ALL identities (humans, machines, and AI agents) to every permission on every resource across all connected systems. Instead of guessing, security teams can ask Veza Access AI precise questions and get definitive answers. For example:

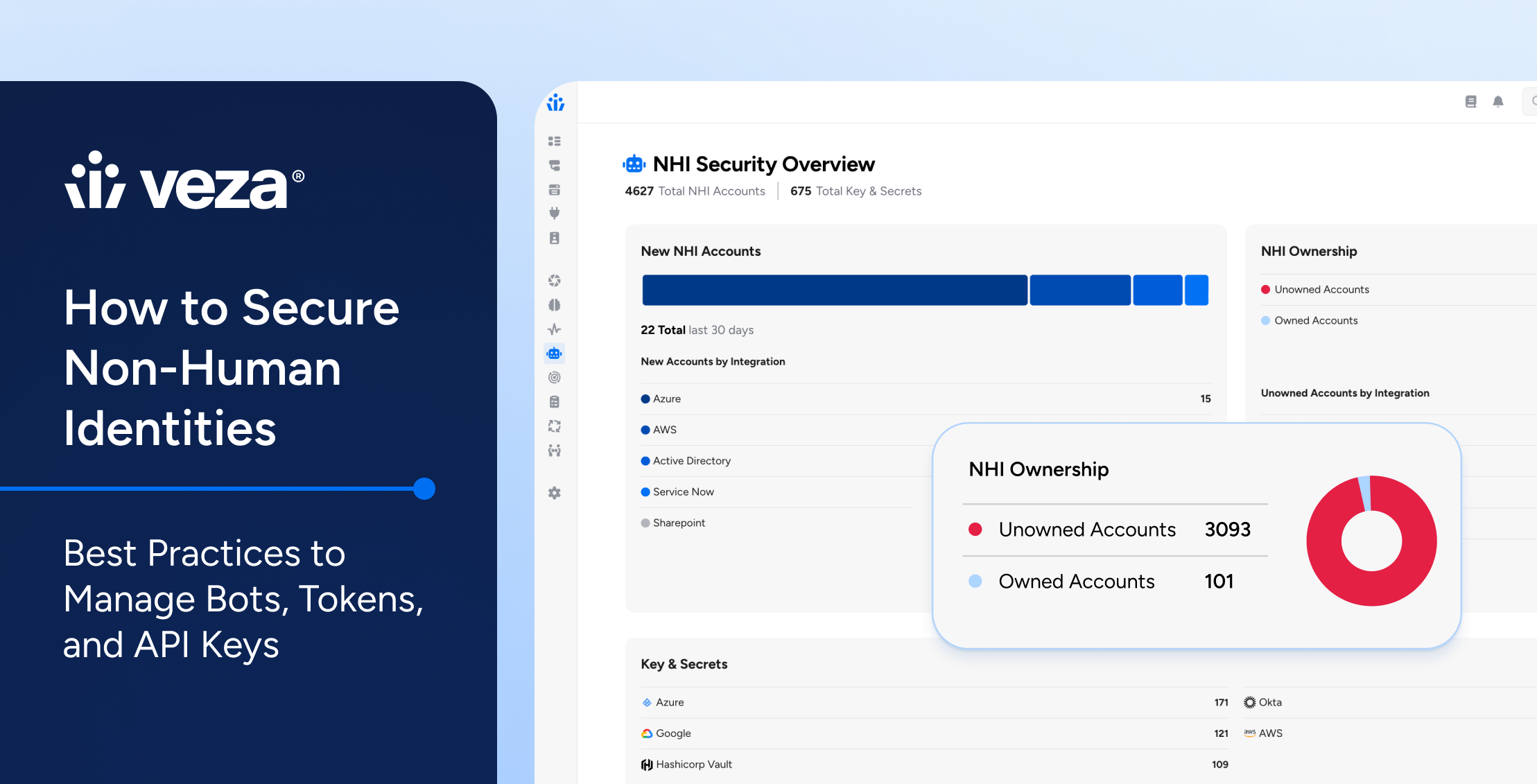

- Veza can discover all Non-Human Identities (NHIs), including the service accounts and tokens that power these AI agents, and show exactly what they can access.

- It translates complex, system-specific permissions into simple, human-readable terms like “Create, Read, Update, Delete,” so you can understand an agent’s true capabilities at a glance.

- This allows organizations to proactively find and remove excessive privileges before they are exploited, ensuring the agent has only the access it truly needs for its designated task.
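To make "effective permissions" concrete, here is a minimal Python sketch that models a tiny authorization graph as plain dictionaries. It is not Veza’s API or data model. The identities, roles, and resources (`support-agent`, `mail-reader`, `drive:shared`, and so on) are hypothetical. The sketch resolves an agent’s effective permissions through its role bindings and compares them with what its task actually requires, which surfaces the email-plus-delete-files surprise described above. It also shows the combined permissions of agents that collaborate.

```python
# Hypothetical authorization graph: identities are bound to roles,
# and roles grant (resource, action) pairs. All names are illustrative.
ROLE_BINDINGS = {
    "support-agent": ["mail-reader", "drive-editor"],
    "alice": ["drive-editor"],
}

ROLE_GRANTS = {
    "mail-reader": {("mailbox:support", "read")},
    "drive-editor": {
        ("drive:shared", "read"),
        ("drive:shared", "update"),
        ("drive:shared", "delete"),
    },
}


def effective_permissions(identity: str) -> set[tuple[str, str]]:
    """Return every (resource, action) pair the identity holds via its roles."""
    perms: set[tuple[str, str]] = set()
    for role in ROLE_BINDINGS.get(identity, []):
        perms |= ROLE_GRANTS.get(role, set())
    return perms


def excess_permissions(identity: str, required: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Permissions the identity holds but its task does not need."""
    return effective_permissions(identity) - required


def collective_permissions(identities: list[str]) -> set[tuple[str, str]]:
    """Union of permissions across agents (or humans) that work together."""
    combined: set[tuple[str, str]] = set()
    for identity in identities:
        combined |= effective_permissions(identity)
    return combined


if __name__ == "__main__":
    task_needs = {("mailbox:support", "read"), ("drive:shared", "read")}
    for resource, action in sorted(excess_permissions("support-agent", task_needs)):
        print(f"excess: {action} on {resource}")
```

Run as written, this flags `delete` and `update` on `drive:shared` as excess: the agent only needed to read mail and read the shared drive, but its `drive-editor` role carries more.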

2. Containing the “Blast Radius” of a Compromise

Sam rightly warns that “bad actors may try to ‘trick’ users’ AI agents into giving private information they shouldn’t and take actions they shouldn’t.” This is the compromised-agent scenario. When it happens, the critical question is: what damage can the agent do?

The Challenge: Without a unified view, determining the potential “blast radius” of a compromised agent is a slow, manual process of digging through logs and settings in every individual application.

How Veza Access AI Helps: Veza provides instant incident response intelligence. If an agent’s credential is leaked or it begins acting maliciously, the Authorization Graph can immediately reveal:

- Every single folder, database, application, and system the agent has access to.

- The specific permissions (read, write, delete) it has on each of those resources.

Teams can then remove the offending permissions from the agent to limit the damage. This transforms incident response from a multi-day investigation into a real-time impact analysis, so the threat can be contained quickly.
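At its core, the blast-radius question is a reachability query over the graph. Starting from the leaked credential, you follow every edge it can traverse (role assumptions, group memberships, delegated tokens) and collect the resources and actions at the end. The Python sketch below is a minimal breadth-first search under that assumption. The node names, the edge layout, and the `blast_radius` helper are hypothetical and do not reflect how Veza stores or queries its graph.

```python
from collections import deque

# Hypothetical graph: each node lists the nodes it can reach in one hop.
# Edges model role assumption, group membership, or token delegation.
EDGES = {
    "token:agent-42": ["role:agent-runtime"],
    "role:agent-runtime": ["group:data-readers", "role:deployer"],
    "group:data-readers": [],
    "role:deployer": ["role:agent-runtime"],  # a cycle, as real role chains can have
}

# Hypothetical direct grants: node -> set of (resource, action).
GRANTS = {
    "group:data-readers": {("db:customers", "read")},
    "role:deployer": {("bucket:artifacts", "write"), ("bucket:artifacts", "delete")},
}


def blast_radius(start: str) -> dict[str, set[str]]:
    """Map each resource reachable from `start` to the actions allowed on it."""
    impact: dict[str, set[str]] = {}
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        # Record whatever this node grants directly.
        for resource, action in GRANTS.get(node, set()):
            impact.setdefault(resource, set()).add(action)
        # Follow outgoing edges, skipping nodes already visited (cycle guard).
        for nxt in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return impact


if __name__ == "__main__":
    for resource, actions in sorted(blast_radius("token:agent-42").items()):
        print(resource, sorted(actions))
```

The `seen` set matters because role chains can loop back on themselves. Without it, the cycle between `role:deployer` and `role:agent-runtime` would make the search run forever.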

3. Enabling the “Co-Evolution” of Technology and Security

Sam also mentioned that new capabilities, technology, and risk mitigation must “co-evolve.” This requires security tools that are as intelligent and adaptable as the AI they are meant to govern.

The Challenge: Traditional security tools are static and rule-based, making them ill-equipped to manage the dynamic, non-deterministic behavior of AI agents.

How Veza Access AI Helps: Veza uses “AI to govern AI”.

- Access Intelligence: The platform continuously analyzes access patterns to identify risks like dormant accounts or toxic permission combinations, allowing for a proactive rather than reactive security posture.

- Natural Language Queries: Veza’s Access AI allows security teams to ask plain-English questions about their security posture, such as, “Show me all non-human identities with access to production data” or “Alert me if any AI agent is granted administrator privileges”. This provides a “security co-pilot” that makes sophisticated governance intuitive and accessible.

- Future-Proofing with Extensibility: As new AI platforms and agents emerge, Veza’s Open Authorization API (OAA) allows organizations to integrate any custom or new system into the Authorization Graph, ensuring that visibility and control can keep pace with innovation.

In essence, while the creators of powerful AI agents provide warnings and high-level guidance, Veza provides the practical tools needed to enforce that guidance at scale. It gives organizations the confidence to adopt transformative technologies like the “ChatGPT Agent” by replacing uncertainty and risk with visibility and control.