Introduction

Generative AI is no longer experimental; it’s embedded in mission-critical workflows – from analyst copilots to customer-facing agents. But with innovation come governance concerns:

- Who can create or delete an AI assistant (i.e., a custom agent)?

- Are ex-contractors or orphaned identities still active in OpenAI projects?

- Can you demonstrate least privilege and compliance alignment to the CISO?

These aren’t theoretical issues. Non-human identities now frequently outnumber human accounts by factors of 40:1 or more in enterprise environments. CrowdStrike has flagged unmanaged service accounts as a key identity attack vector. IT Pro reports that many organizations have no systematic way to track or govern non-human accounts. HashiCorp emphasizes vaulting and lifecycle controls, yet notes adoption gaps. Even Google Cloud warns that failing to manage service accounts is one of the most common security mistakes in AI adoption. The Cloud Security Alliance calls this the “blind spot of AI governance,” while Cybersecurity Tribe frames it as the defining identity challenge of 2025.

At the same time, identity sprawl in AI projects magnifies the risks of over-permissioned roles. Varonis notes that applying the principle of least privilege (PoLP) is critical for AI security, since any excess permission can grant access to sensitive data or models. LegitSecurity underscores the risk of developer role sprawl and urges automation of audits. And research on model governance stresses that “who can access or modify models” must be a core governance concern in enterprise AI (arXiv).

With that backdrop, let’s examine how three enterprise roles confront these challenges – and how modern identity governance tools like Veza help close the gap.

Perspective 1: Security Engineering Lead

The Situation

OpenAI pilots are underway across product, marketing, and data teams. Each group spins up projects with ad hoc roles and decentralized owners. Membership tracking is inconsistent.

The Challenge

Security leaders can’t confidently answer:

- Who can create or delete assistants?

- Do any ex-engineers or contractors still have elevated access?

- How do we prove least privilege for compliance?

Why It Matters

AI’s automation power magnifies risk when roles are over-assigned. Without proper governance, permissions can become unintentionally broad, which can lead to data breaches.

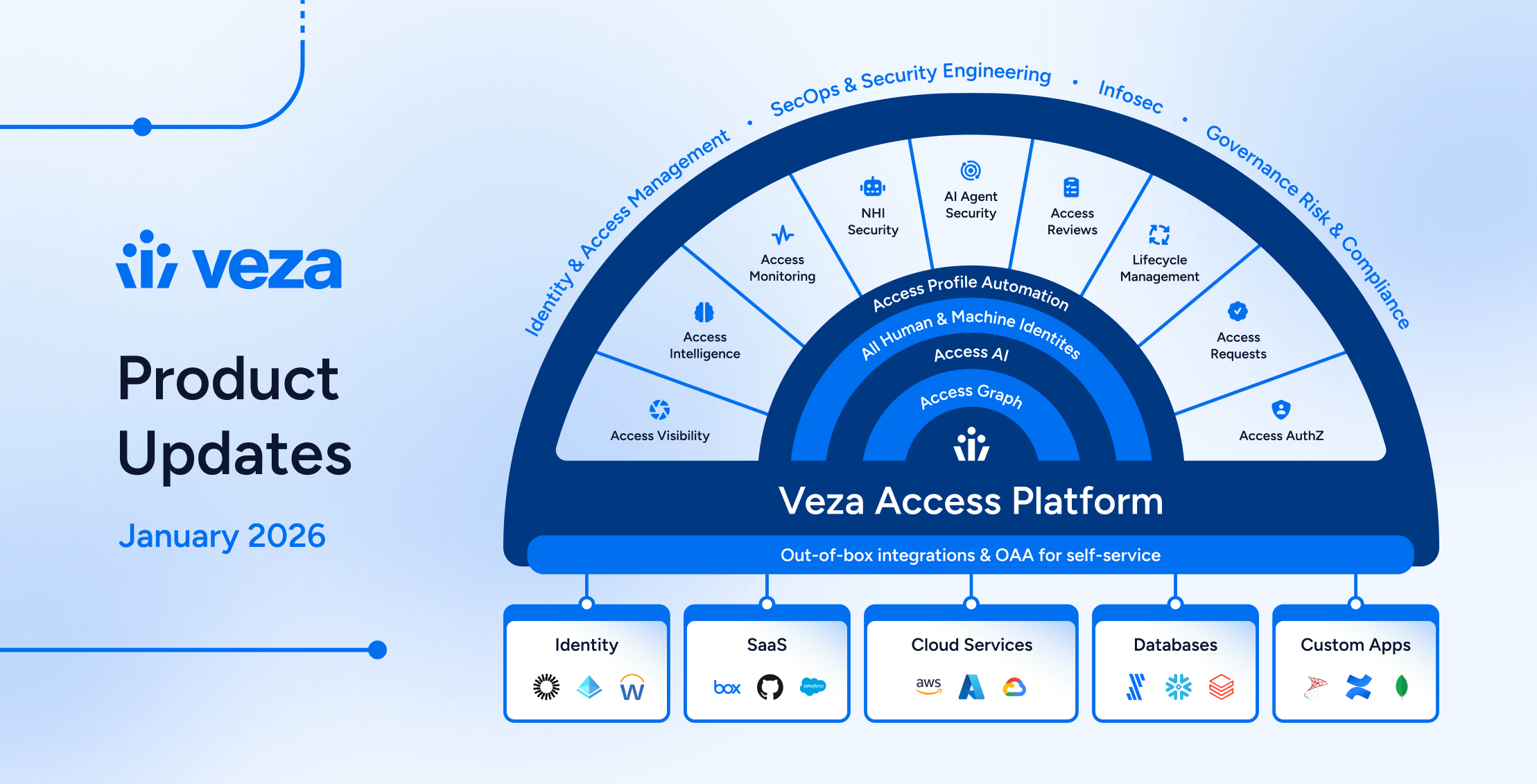

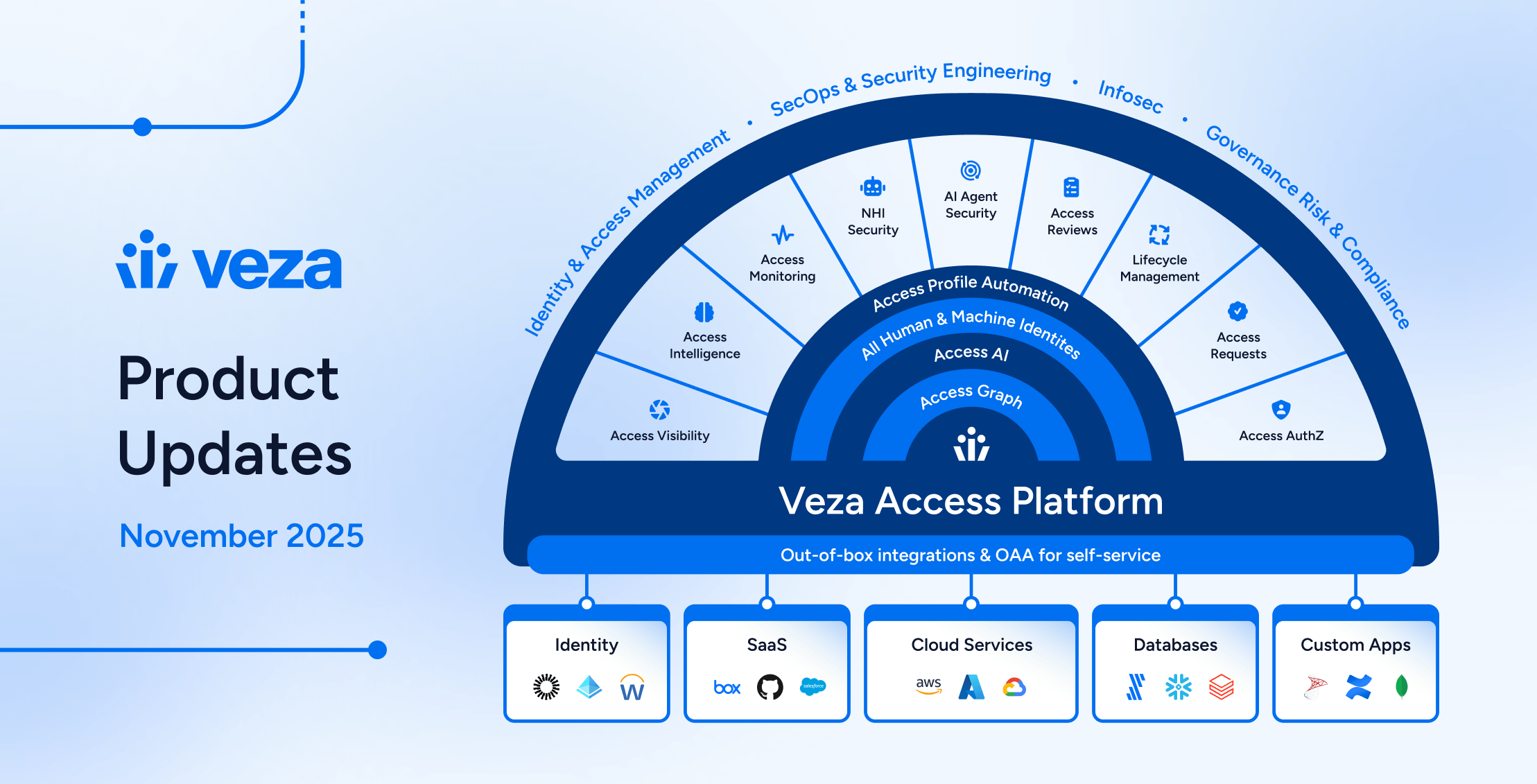

Modern Governance Approach

Introducing a tool such as Veza, which integrates OpenAI’s Members and Roles into an enterprise Access Graph, allows teams to see “who can do what.” This visibility enables continuous enforcement of least privilege, identification of stale access, and audit-ready governance.
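To make "identification of stale access" concrete, here is a minimal sketch of the underlying check. It is not Veza's implementation or any OpenAI API: the member records, field names, roster, and the 90-day idle threshold are all assumptions chosen for illustration.

```python
from datetime import date, timedelta

# Hypothetical export of project members. Field names are assumptions,
# not the schema of OpenAI or Veza.
members = [
    {"email": "ana@example.com", "role": "owner", "last_active": date(2025, 6, 1)},
    {"email": "raj@example.com", "role": "member", "last_active": date(2025, 1, 10)},
    {"email": "kim@example.com", "role": "owner", "last_active": date(2025, 5, 20)},
]

# Hypothetical roster of identities that are still employed or contracted.
active_roster = {"ana@example.com", "raj@example.com"}


def find_stale_access(members, active_roster, today, max_idle_days=90):
    """Return (email, reason) pairs for members who are off the roster or idle too long."""
    findings = []
    for member in members:
        if member["email"] not in active_roster:
            findings.append((member["email"], "not on active roster"))
        elif today - member["last_active"] > timedelta(days=max_idle_days):
            findings.append((member["email"], "idle"))
    return findings


stale = find_stale_access(members, active_roster, today=date(2025, 6, 15))
for email, reason in stale:
    print(email, reason)
```

In this made-up data set, the check surfaces one idle member and one former contractor who still holds an owner role, which is the kind of result a security lead would want to review first.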

Perspective 2: Principal Data Platform Engineer

The Situation

A principal engineer supports multiple OpenAI rollouts for various business units. Developers often request “just give me admin” to accelerate prototyping of agents or embedding LLMs into applications.

The Challenge

- Excessive permission grants escalate downstream risk.

- Audits become painful: When asked, “Who can delete assistants?”, the engineer scrambles through manual exports and inspections.

Why It Matters

Unchecked permission creep, especially in AI environments, accelerates exposure to sensitive data or model misuse.

Modern Governance Approach

Veza visualizes member → role → action relationships, helping identify and remediate over-permissioned accounts. Automated access reviews shift audits from firefighting to a structured, continuous process.
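The member → role → action relationship can be pictured as two lookups chained together. The sketch below is illustrative only: the role names, action names, and members are hypothetical and do not reflect OpenAI's actual role definitions or Veza's data model.

```python
# Hypothetical role-to-action mapping; names are illustrative only.
ROLE_ACTIONS = {
    "admin": {"assistant.create", "assistant.delete", "assistant.read"},
    "developer": {"assistant.create", "assistant.read"},
    "viewer": {"assistant.read"},
}

# Hypothetical member-to-role assignments, including one automation identity.
MEMBER_ROLES = {
    "ana@example.com": ["admin"],
    "raj@example.com": ["developer"],
    "etl-bot": ["admin"],
    "kim@example.com": ["viewer", "developer"],
}


def who_can(action, member_roles, role_actions):
    """Return the sorted list of members whose roles grant the given action."""
    return sorted(
        member
        for member, roles in member_roles.items()
        if any(action in role_actions.get(role, set()) for role in roles)
    )


def unused_permissions(member, needed, member_roles, role_actions):
    """Return actions granted to a member beyond the set it actually needs."""
    granted = set().union(*(role_actions.get(r, set()) for r in member_roles[member]))
    return granted - set(needed)


deleters = who_can("assistant.delete", MEMBER_ROLES, ROLE_ACTIONS)
excess = unused_permissions("etl-bot", {"assistant.read"}, MEMBER_ROLES, ROLE_ACTIONS)
print(deleters)
print(sorted(excess))
```

With the mapping in one place, "Who can delete assistants?" becomes a single query, and comparing granted actions against needed actions gives a simple signal for over-permissioned accounts such as the hypothetical `etl-bot`.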

Perspective 3: Head of Data Platform (Retail)

The Situation

A global retailer has deployed OpenAI-powered copilots. Their data stack spans Snowflake, Databricks, and OpenAI – using shared identities. Security and auditors request proof of control over these environments, especially under SOX and PCI DSS.

The Challenge

- Native OpenAI tooling lacks governance visibility.

- Auditor demands for identity control and compliance are mounting.

- Service accounts and automation bots (ETL scripts) tied to OpenAI are largely zero-touch with no oversight.

Why It Matters

Non-human identities (NHIs) – bots, service accounts, tokens, etc. – pose one of the most underestimated risks in modern enterprises, with many cases of exposure leading to automation-based breaches.

Modern Governance Approach

With Veza, human and non-human identities are aligned under a governance lens. Least-privilege policies are applied, and compliance evidence is generated – covering frameworks such as SOX, PCI DSS, NIST 800-53, and ISO 27001.
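As a rough illustration of reviewing human and non-human identities together and producing evidence from the result, the sketch below flags non-human identities that have no accountable owner and writes the findings as CSV. The inventory, field names, and the single "no owner" rule are assumptions for this example; they are not a Veza feature description or a requirement quoted from any of the frameworks above.

```python
import csv
import io

# Hypothetical unified inventory of human and non-human identities.
identities = [
    {"id": "ana@example.com", "kind": "human", "owner": "ana@example.com", "role": "owner"},
    {"id": "etl-nightly", "kind": "service_account", "owner": None, "role": "owner"},
    {"id": "copilot-api-key", "kind": "api_key", "owner": "raj@example.com", "role": "member"},
]


def review_findings(identities):
    """Flag non-human identities that have no accountable owner."""
    findings = []
    for ident in identities:
        if ident["kind"] != "human" and not ident["owner"]:
            findings.append({"id": ident["id"], "finding": "non-human identity has no owner"})
    return findings


def to_csv(rows, fieldnames):
    """Serialize findings as CSV text that can be attached to an access review."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


findings = review_findings(identities)
evidence = to_csv(findings, ["id", "finding"])
print(evidence)
```

A real review would apply more rules than this one, but the shape stays the same: one inventory, a set of policy checks, and an exportable record of what was found.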

Why This Matters

Across security, engineering, and platform roles, the governance gap is stark: OpenAI enables innovation effortlessly, but without governance, it amplifies risk. Legacy tools don’t offer the cross-system visibility or control needed.

To mitigate these risks, enterprises need a modern identity governance layer that:

- Maps identity permissions clearly

- Automates access reviews

- Enforces least privilege continuously

- Provides audit-ready evidence across regulatory frameworks

Learn More

- Integration Documentation: Deep dive into Veza’s OpenAI integration architecture and supported entities. See also the Veza for OpenAI Solution Brief and Modern Identity Security for OpenAI Members and Roles.

- On-Demand Webinar: “Securing Non-human Identities in the Enterprise with HashiCorp Vault and Veza” – explore NHI governance strategies.

- Request a Demo: See Veza enforce AI access governance live.