This article was written by Maohua Lu, Shanmukh Sista, and Tarun Thakur

The Changing Face of AI and Access

Artificial intelligence has evolved dramatically over the past few years. Once limited to narrow tasks, AI systems can now act more autonomously, a capability often referred to as “Agentic AI.” Instead of just writing snippets of code or summarizing documents, these AI agents can actually log into data sources or SaaS applications, generate or modify records, and even trigger complex workflows. For an enterprise hoping to boost efficiency, the potential is huge. Yet this same autonomy introduces serious questions about how to control what data an AI agent can access, how it uses that data, and what might happen if its identity or credentials are compromised.

Historically, identity and access management (IAM) solutions have focused on human users. Employees or contractors listed in a directory service would log in via single sign-on, pass multi-factor authentication, and be granted roles or privileges, all through group management. AI assistants and AI agents, however, are “users” that might not have a phone for MFA or a standard user profile in your identity provider. They may be ephemeral service accounts whose credentials often slip through the cracks. When that happens, an AI agent can accumulate privileges across different systems, effectively bypassing the careful role structures you put in place for enterprise systems. Understanding this shift, and ensuring it does not turn into a security liability, requires a new, identity-centric approach that explicitly accounts for these modern AI agents.

Why MCP is quickly becoming a de facto standard for AI apps to connect and access data

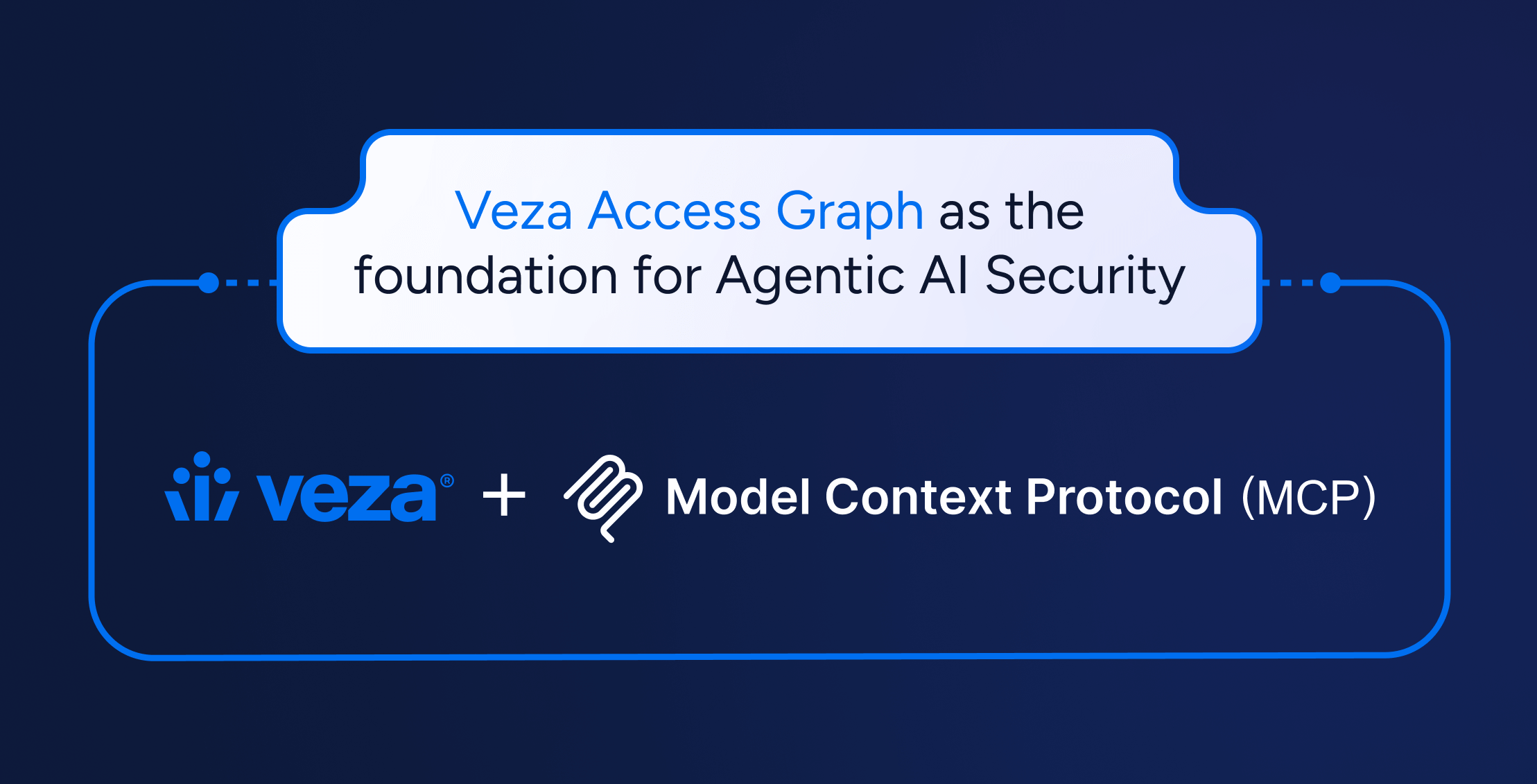

As organizations race to let Gen AI and Agentic AI do more across their data sources, ranging from CRMs and code repositories to HR systems, the complexity of building custom integrations has ballooned. Imagine writing a separate plugin for every single AI-to-database or AI-to-application connection. That approach quickly becomes unmanageable. Enter Model Context Protocol (MCP), an open standard (originally developed by Anthropic, and now adopted by OpenAI) designed to unify how AI assistants discover resources, read or write data, and even call “tools” on remote systems.

MCP has quickly gained traction as developers rush to standardize AI integrations and avoid duplicative “adapter” code. Teams large and small are now experimenting with MCP servers that wrap everything from internal databases to SaaS APIs, while several AI platforms are actively building native MCP support. Although debates persist over its complexity, MCP’s promise of unifying AI and data under a shared protocol has encouraged many organizations to see it as a crucial stepping stone to the next wave of autonomous, integrated AI applications.

By embracing the Model Context Protocol, enterprises can unify data sources and tools under a single interface that is AI-aware. Rather than building a tangle of ad-hoc integrations for each service, MCP standardizes how your UI components expose data (as ‘resources’) and actions (as ‘tools’) to any LLM-based workflow. This means you can seamlessly give your AI assistant the ability to retrieve database records, call custom APIs, or run analytics jobs, all orchestrated with predictable schemas and real-time permission checks. Whether you’re generating compliance summaries or surfacing real-time risk metrics, MCP ensures every part of your UI can be ‘AI-enabled’ with minimal overhead—empowering teams to focus on high-level insights instead of boilerplate integration code.

From a technical standpoint, MCP can be viewed as a straightforward client-server design, in which each “MCP client” is typically an AI assistant or language model that initiates requests, and each “MCP server” is a data source, tool, or service that speaks a shared, JSON-RPC-based dialect. The client sends commands like “list resources,” “read document,” or “invoke tool,” and the server responds with structured data on what it can do or what it found. By formalizing these exchanges in a uniform schema, MCP removes the need for custom code or ad hoc integrations. Developers simply spin up an MCP server on top of an existing system—be it a database, SaaS app, or file store—and any AI that supports MCP gains immediate awareness of that system’s capabilities. With this standardized approach, organizations can introduce new data sources or retire old ones without endlessly rewriting plugins, though they still need robust identity controls to ensure that not every AI agent can call every server without proper access controls.
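To make the exchange concrete, here is a minimal sketch of the three client commands mentioned above, written as JSON-RPC 2.0 requests. The method names (`resources/list`, `resources/read`, `tools/call`) reflect our reading of the MCP specification and should be checked against the version you target. The `make_request` helper, the document URI, and the `create_ticket` tool are hypothetical and exist only for illustration.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids; JSON-RPC uses the id to match a response to its request.
_request_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper, not an SDK call)."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# "list resources": ask the server what data it exposes.
list_resources = make_request("resources/list")

# "read document": fetch one resource by URI (the URI is made up).
read_document = make_request("resources/read", {"uri": "file:///reports/q3.md"})

# "invoke tool": call a server-side action (tool name and arguments are made up).
invoke_tool = make_request(
    "tools/call",
    {"name": "create_ticket", "arguments": {"title": "Rotate agent credentials"}},
)

if __name__ == "__main__":
    for request in (list_resources, read_document, invoke_tool):
        print(request)
```

Nothing in these messages says who the caller is or what it is entitled to do, which is why the identity controls discussed in the rest of this article have to sit around the protocol.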

The Security & Access Risks of an “Agentic AI” World

Standards like MCP make it easier for AI to move between systems, gather context, and trigger operations. Yet that also creates new forms of risk. Below are some of the most common security and access concerns organizations face when modern AI agents begin orchestrating multiple data sources.

“Permissions Creep” in AI Agents

When you have a single AI assistant that can log into Google Drive, read Slack channels, query an internal database, and call a Jira API, there is a risk of scope creep, where an AI agent ends up with broader privileges than intended. If you cannot see or control what the AI is truly allowed to do, your data could inadvertently leak or be used in unauthorized ways.

Identity Masquerading

Traditionally, identity-based security is about mapping real human accounts (e.g., “Alice in engineering”) to resources (SaaS, databases, cloud systems, on-premises systems, etc.). But AI “agents” are not exactly employees. They often act on behalf of multiple users, or they might be a system identity that belongs to a service. You need robust identity management to differentiate these “AI identities” from real human users, or to scope them properly with ephemeral tokens. A username is not an identity. Identity is rooted in permissions and entitlements.

Invisible Overprivileged Access

A hallmark of many data breaches is that some service account has far more privileges than it needs. Now imagine an LLM agent that can request read/write permissions on multiple data sources in one session. Unless that access is carefully provisioned, managed, and locked down, the AI might hold the “keys to the kingdom.” Properly scoping what the LLM agent can do for each resource, including when it may subscribe to or unsubscribe from resource updates, becomes crucial.
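One way to picture per-resource scoping is a deny-by-default check that runs before every agent request. The sketch below is an illustration under our own assumptions, not part of MCP or of any product: the `AgentGrant` type, the `is_allowed` function, and the resource and action names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class AgentGrant:
    """Explicit per-resource permissions for one agent (hypothetical model)."""
    agent_id: str
    # Maps a resource name to the set of actions the agent may perform on it.
    allowed: Dict[str, FrozenSet[str]] = field(default_factory=dict)


def is_allowed(grant: AgentGrant, resource: str, action: str) -> bool:
    """Deny by default: anything not explicitly granted is refused."""
    return action in grant.allowed.get(resource, frozenset())


# Example grant: read-only on the CRM, read/write/subscribe on tickets,
# and nothing at all on payroll.
support_bot = AgentGrant(
    agent_id="support-bot",
    allowed={
        "crm": frozenset({"read"}),
        "tickets": frozenset({"read", "write", "subscribe"}),
    },
)

if __name__ == "__main__":
    print(is_allowed(support_bot, "crm", "read"))
    print(is_allowed(support_bot, "crm", "write"))
    print(is_allowed(support_bot, "payroll", "read"))
```

Treating `subscribe` as an action of its own means an agent's ability to receive resource updates is granted and revoked as deliberately as its ability to read or write.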

Cross-System Entitlements and AI Access Drift

When an AI model has partial context from System A (say, a CRM database) and partial context from System B (say, a payroll system), it might combine them in ways you did not anticipate. This raises privacy and compliance concerns, especially if regulated or sensitive data (e.g., PII, financial records, patient info) can flow through the AI pipeline without guardrails.

From Human-Centric Identity Access Control to Agentic AI Identity Security

Despite these challenges, many organizations still treat AI-based service accounts no differently than a handful of background scripts. They might have a single privileged user that the AI always uses, or they might fail to assign an explicit “owner” to a non-human identity, letting it persist with powerful access privileges. This mindset overlooks the fact that an AI agent can, in effect, do more than many human employees, since it operates continuously at lightning speed and can integrate multiple sources at once.

Shifting toward a robust identity governance approach means acknowledging that every AI agent is its own distinct identity, subject to the same or greater scrutiny as a human login. An agent that can query production data needs to be recognized by your identity platform, so you can monitor how often it logs in, what commands it executes, what data it accesses, and whether its privileges expand over time. This implies rotating its credentials regularly, limiting its token lifetime, and removing any permissions that do not match its actual usage.
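As a rough illustration of limiting token lifetime, the sketch below issues agent credentials that expire after a short, capped window, so rotation happens by design rather than by reminder. The 15-minute cap, the `AgentToken` type, and the `issue_token` and `is_valid` functions are assumptions made for this example; a real deployment would rely on its identity provider's token service.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed policy for illustration: no agent token outlives 15 minutes.
MAX_TOKEN_LIFETIME = timedelta(minutes=15)


@dataclass(frozen=True)
class AgentToken:
    agent_id: str
    value: str
    expires_at: datetime


def issue_token(agent_id: str, now: datetime,
                lifetime: timedelta = MAX_TOKEN_LIFETIME) -> AgentToken:
    """Issue a random token, clamping any requested lifetime to the policy cap."""
    lifetime = min(lifetime, MAX_TOKEN_LIFETIME)
    return AgentToken(agent_id, secrets.token_urlsafe(32), now + lifetime)


def is_valid(token: AgentToken, now: datetime) -> bool:
    """A token is valid only strictly before its expiry time."""
    return now < token.expires_at


if __name__ == "__main__":
    issued_at = datetime.now(timezone.utc)
    # The agent asks for eight hours but receives the capped lifetime.
    token = issue_token("report-agent", issued_at, timedelta(hours=8))
    print(token.expires_at - issued_at)
    print(is_valid(token, issued_at + timedelta(minutes=20)))
```

Because every token expires quickly, a leaked credential has a small window of usefulness, and each reissue is a natural point to re-check whether the agent's permissions still match its actual usage.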

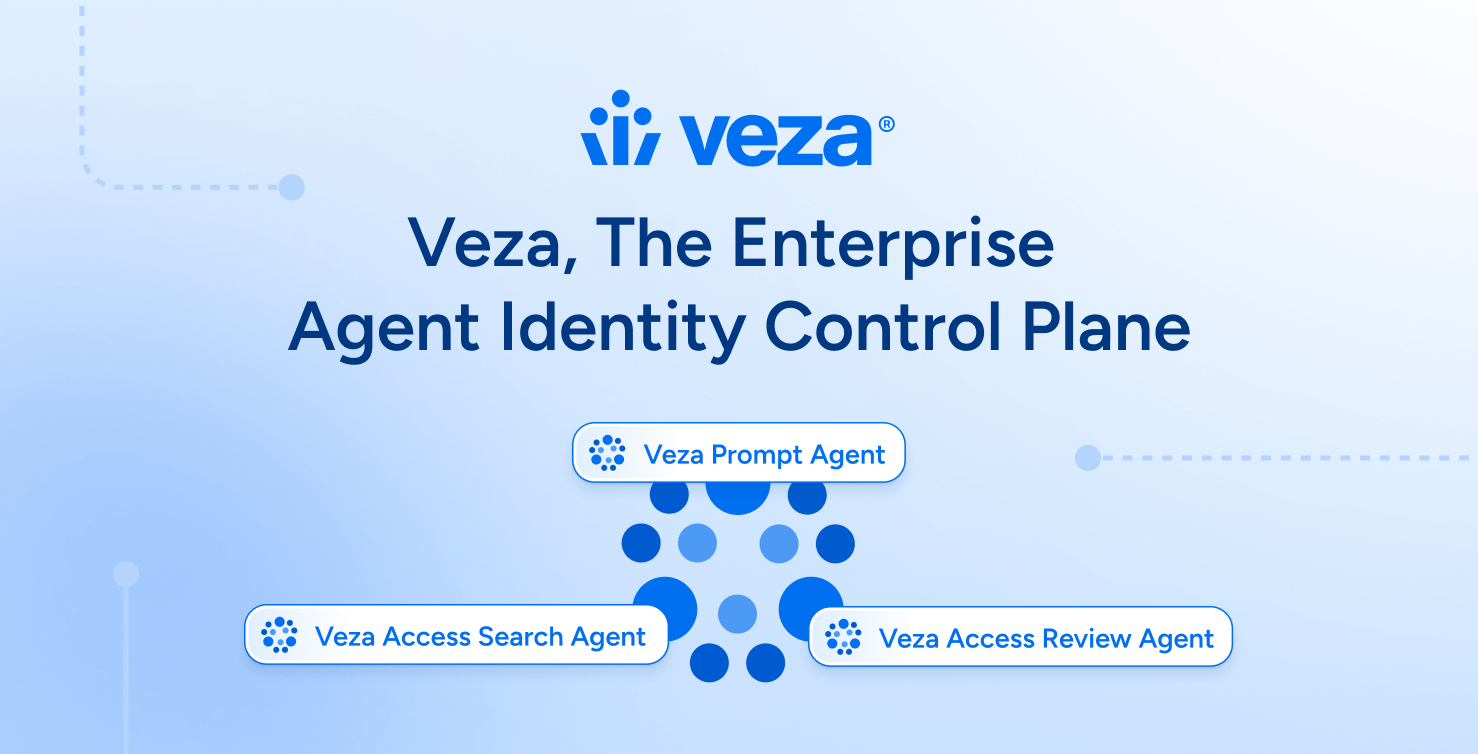

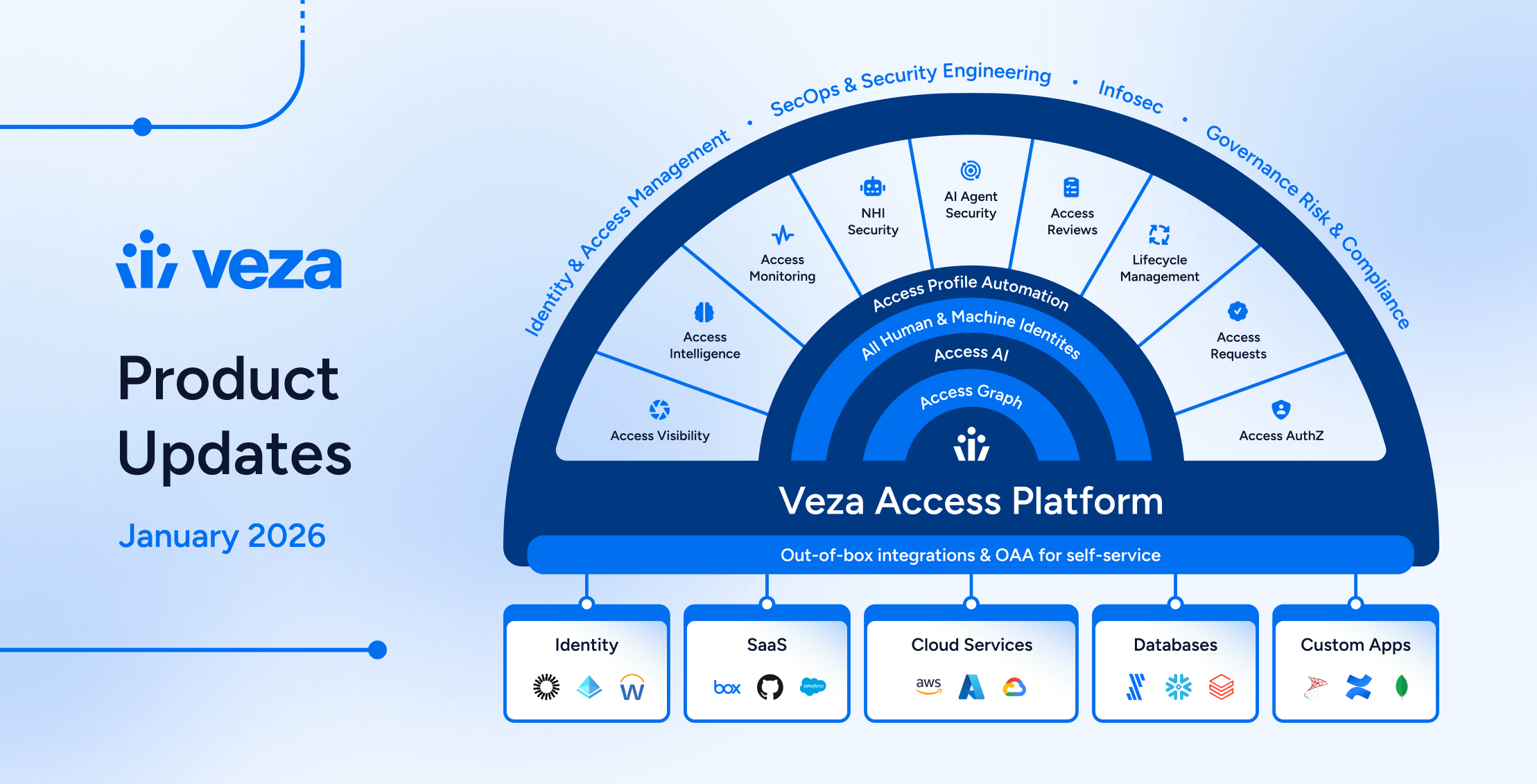

Veza Access Graph Allows Organizations to Leverage MCP for the Agentic AI Era

As organizations embrace MCP-based mechanisms for Agentic AI apps, the question becomes how to gain a holistic view of these new identities, their privileges, and their interactions with your data. The username is no longer an identity. Identity is rooted in permissions and entitlements. That is where Veza’s Access Graph comes in as the identity foundation for the Agentic AI era. By leveraging identity metadata from cloud providers, SaaS apps, databases, and on-prem systems, Veza has designed an industry-first data model (the Veza Access Graph) of relationships that reveals who or what can access each resource, and through which policy, role, permission, or nested role.

The Veza Access Graph does not stop at enumerating human identities. It includes every “non-human” account, from ephemeral microservices to advanced AI agents, so you can see exactly what your AI agent is doing. By layering activity data on top of these entitlements, Veza can highlight if an AI agent has privileges it never uses (dormant access), or if it is performing operations that appear suspicious for its intended purpose. This proactive stance on identity security ensures that you are not manually piecing together partial logs from each system, but rather surfacing the full, cross-platform identity story in one view.
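The idea of dormant access can be shown with a simple set difference between what an agent is granted and what it has actually been observed using. This is a generic sketch under our own assumptions and does not represent Veza's API or data model; the `find_dormant_access` function, the agent name, and the sample permissions are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# A permission is modeled here as a (resource, action) pair.
Permission = Tuple[str, str]


def find_dormant_access(
    granted: Dict[str, Set[Permission]],
    observed: Dict[str, Set[Permission]],
) -> Dict[str, List[Permission]]:
    """Return, per agent, the granted permissions that never appear in activity data."""
    dormant: Dict[str, List[Permission]] = {}
    for agent, permissions in granted.items():
        unused = permissions - observed.get(agent, set())
        if unused:
            dormant[agent] = sorted(unused)
    return dormant


# Sample entitlements and activity, made up for illustration.
granted = {
    "report-agent": {("crm", "read"), ("crm", "write"), ("payroll", "read")},
}
observed = {
    "report-agent": {("crm", "read")},
}

if __name__ == "__main__":
    print(find_dormant_access(granted, observed))
```

In this sample, the agent only ever reads from the CRM, so its write access to the CRM and its read access to payroll are candidates for removal.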

Harnessing MCP Safely in an AI-Driven Future

Model Context Protocol represents a new paradigm in how AI agents communicate with the enterprise. Rather than building endless one-off integrations, companies can spin up standardized MCP servers to expose data or tools, letting AI clients discover and use them on demand. This cuts down on development overhead and frees your AI to orchestrate tasks across multiple platforms. But it also raises the stakes for how you manage privileges and protect against AI misuse.

The Agentic AI era is here, and MCP will be a cornerstone of how these agents connect with your data systems. By adopting a platform like Veza, where every identity, human or AI, is mapped and monitored in real time, you can confidently embrace the productivity of agentic AI without losing visibility or control. That means your data remains secure, your AI remains within defined boundaries, and your organization is prepared to meet evolving compliance and regulatory standards.

By blending the efficiency gains of MCP with the disciplined oversight of the Veza Access Graph, you can let AI flourish across your enterprise while ensuring that each agent’s identity is recognized, scoped, and continually reviewed.