The enterprise is rapidly deploying an autonomous “agentic workforce.” But these AI systems introduce a new, insidious threat landscape that traditional security tools cannot address.[1] They are also more than the foundation Large Language Models (LLMs) they are built on: they are active systems that connect to corporate data, interact with external tools, and execute complex tasks on behalf of users. This creates a fundamentally new security challenge. The risk now extends to every piece of data an agent can access at inference time, whether through the prompt or through Retrieval-Augmented Generation (RAG), and to every action it can perform with the tools it is given. The attack surface can grow further still through Just-in-Time (JIT) approvals, which grant additional permissions and access that an agent requests during a run, and through Model Context Protocol (MCP) connections to external systems.

Therefore, to secure AI, you must be able to track and govern the entire agentic lifecycle and data ecosystem. As industry analysts at Gartner note, a core function of AI Security Posture Management (AI SPM) is to discover AI models and their associated data pipelines to evaluate how they create risk.

The New AI Threat Landscape: From Data Poisoning to Malicious MCPs

The risks associated with AI represent a new class of threats that target the integrity and confidentiality of models and enterprise data, as well as the complex supply chains that support them.

- Training Data Poisoning: Attackers can intentionally manipulate the data used to train a model, introducing vulnerabilities, backdoors, or biases. By poisoning as little as 1-3% of a dataset, an attacker can significantly impair an AI’s accuracy. This could cause a fraud detection model to overlook criminal activity or a customer service bot to promote malicious links.

- Model Inversion and Data Leakage: Through sophisticated query techniques, attackers can analyze a model’s outputs to reconstruct parts of the sensitive data it was trained on, such as private medical photos or proprietary source code. The risk is amplified when AI agents, which often lack user-specific access controls, inadvertently include in their responses sensitive data that the user is not authorized to see.

- Compromised AI Supply Chains & Malicious MCPs: AI agents increasingly rely on a supply chain of external tools and data sources to perform tasks, often connected via the Model Context Protocol (MCP). An MCP server acts as a bridge that gives an agent new capabilities, but these servers also represent a new attack vector. A malicious or compromised MCP server can feed an agent tainted context, poison it with malicious tools, or exploit over-permissioned credentials to exfiltrate data. The risk is compounded by the fact that security is often an afterthought in the rush to adopt new AI technologies.

These AI-specific attacks have real-world consequences. In a widely reported incident, an open-source AI penetration testing framework called HexStrike-AI was repurposed by threat actors within hours of its release to exploit zero-day vulnerabilities in Citrix NetScaler systems, shrinking the time-to-exploit from weeks to minutes. According to the 2024 Verizon DBIR, the use of stolen credentials remains a top breach vector, and with AI agents, a single compromised agent identity can have a catastrophic blast radius.

Clearly, enforcing least privilege is at least as important for AI systems as it is for the rest of the IT infrastructure.
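One way to make least privilege measurable is to compare what an identity has been granted with what it has actually used. The sketch below is a minimal illustration rather than any product’s method: the `Permission` type, the `least_privilege_gap` function, and the sample grants are hypothetical, and it assumes the “used” set has already been derived from something like audit logs over an observation window.

```python
"""Hypothetical sketch: measuring the least-privilege gap for one identity.

Assumes we already know (a) the permissions an identity was granted and
(b) the permissions it actually exercised during some observation window.
All names and data here are illustrative.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class Permission:
    action: str    # e.g. "read", "write", "delete"
    resource: str  # e.g. "s3://finance-reports"


def least_privilege_gap(granted: set[Permission], used: set[Permission]) -> set[Permission]:
    """Return permissions that were granted but never exercised.

    These widen the blast radius of a compromised identity without serving
    any observed task, so they are candidates for review and removal.
    """
    return granted - used


granted = {
    Permission("read", "crm/customers"),
    Permission("write", "crm/customers"),
    Permission("read", "hr/salaries"),
    Permission("delete", "s3://finance-reports"),
}
used = {Permission("read", "crm/customers")}

# Print the unused grants in a stable order (by resource, then action).
for p in sorted(least_privilege_gap(granted, used), key=lambda p: (p.resource, p.action)):
    print(f"unused: {p.action} on {p.resource}")
# unused: write on crm/customers
# unused: read on hr/salaries
# unused: delete on s3://finance-reports
```

For an agent, whose behavior can vary from run to run, a permission left unused during the window may still be needed occasionally, so the output is better treated as a review list than as an automatic revocation list.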

Why Legacy Security Tools Fail the AI Test

Existing security tools were not designed for this new reality. Their fundamental architecture prevents them from surfacing and reducing the least-privilege risks unique to the AI lifecycle.

- Posture Management is NOT Least Privilege: Cloud Security Posture Management (CSPM) can identify a misconfigured cloud resource, and Data Security Posture Management (DSPM) can find sensitive data at rest. However, as Gartner points out, a key driver for AI SPM is the need to map the data pipelines used by models—a task for which these tools lack the necessary identity context.

- Existing IAM, IGA, and PAM Tools are Built for Humans: Traditional Identity Governance and Administration (IGA) and Identity and Access Management (IAM) platforms are built around the structured “joiner-mover-leaver” lifecycle of human employees. They cannot cope with the explosive growth and chaotic, automated lifecycle of agents. While the exact ratio varies by organization, industry reports consistently show that non-human identities, a population that now includes AI agents, dramatically outnumber human identities, often by a factor of 45 to 1 or more. These platforms’ underlying relational databases are also ill-equipped to calculate the complex “effective permissions” across the thousands of identities and billions of permissions in a modern enterprise.[4]

- Non-Human Identity (NHI) Security is Built for Deterministic Workloads: This emerging category of tools helps enterprises discover, govern, and secure service accounts, keys, and secrets, the lifeblood of more traditional workloads such as enterprise applications, containers, and virtual machines. The approach is effective when a workload’s tasks and purposes are “deterministic,” that is, constrained, repeatable, and predictable. AI doesn’t work this way: an agent decides at runtime which data to retrieve and which tools to call, so its behavior can differ from one run to the next.

The reality is that permissions for AI systems like agents are a hybrid of past models and approaches. They often inherit human permissions when they are working on behalf of a user; in this case, they have access to what the person invoking them has. In other cases, an AI agent might have its own access, service accounts, and keys; here, it looks much like a more traditional NHI.
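The hybrid model can be sketched in a few lines. This is an illustrative assumption, not a description of any specific platform: a delegated run is given exactly the invoking user’s permissions, and an autonomous run only the grants on the agent’s own non-human identity. The `Agent`, `RunMode`, `effective_for_run`, and `worst_case_exposure` names and the sample data are hypothetical.

```python
"""Hypothetical sketch: an agent's effective permissions depend on how it runs.

Assumption for illustration only: a delegated run gets exactly the invoking
user's permissions; an autonomous run gets only the grants attached to the
agent's own non-human identity. Real platforms may scope these differently.
"""

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RunMode(Enum):
    DELEGATED = "delegated"    # acting on behalf of the invoking user
    AUTONOMOUS = "autonomous"  # acting with its own service account and keys


@dataclass(frozen=True)
class Agent:
    name: str
    own_grants: frozenset[str]  # permissions on the agent's own NHI credentials
    invokers: frozenset[str]    # users allowed to run the agent on their behalf


def effective_for_run(
    agent: Agent, mode: RunMode, user_grants: Optional[frozenset[str]] = None
) -> frozenset[str]:
    """Permissions the agent holds during a single run."""
    if mode is RunMode.DELEGATED:
        if user_grants is None:
            raise ValueError("a delegated run needs the invoking user's grants")
        return user_grants
    return agent.own_grants


def worst_case_exposure(agent: Agent, grants_by_user: dict[str, frozenset[str]]) -> frozenset[str]:
    """Union of everything the agent could reach across run modes and invokers."""
    exposure = set(agent.own_grants)
    for user in agent.invokers:
        exposure |= grants_by_user.get(user, frozenset())
    return frozenset(exposure)


grants_by_user = {
    "alice": frozenset({"read:crm", "read:hr"}),
    "bob": frozenset({"read:crm", "write:billing"}),
}
agent = Agent(
    "support-bot",
    own_grants=frozenset({"read:kb", "send:email"}),
    invokers=frozenset({"alice", "bob"}),
)

print(sorted(effective_for_run(agent, RunMode.DELEGATED, grants_by_user["alice"])))
# ['read:crm', 'read:hr']
print(sorted(effective_for_run(agent, RunMode.AUTONOMOUS)))
# ['read:kb', 'send:email']
print(sorted(worst_case_exposure(agent, grants_by_user)))
# ['read:crm', 'read:hr', 'read:kb', 'send:email', 'write:billing']
```

The last result is the point of the exercise: under these assumptions, the agent’s blast radius is not only its own service account but also everything its invokers can reach, because an attacker who manipulates the agent during delegated runs acts with those users’ permissions.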

The Power of an Access Graph: Mapping the AI Data Lineage

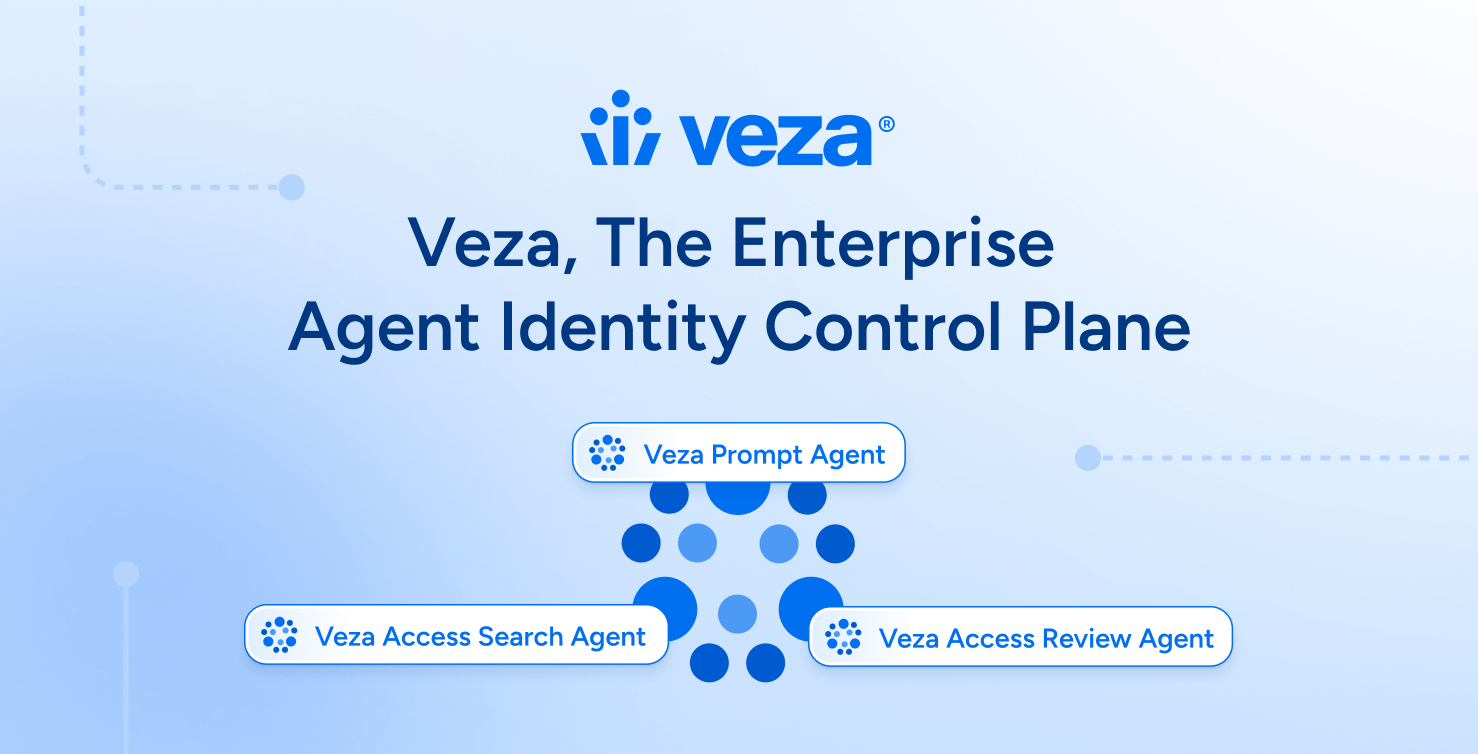

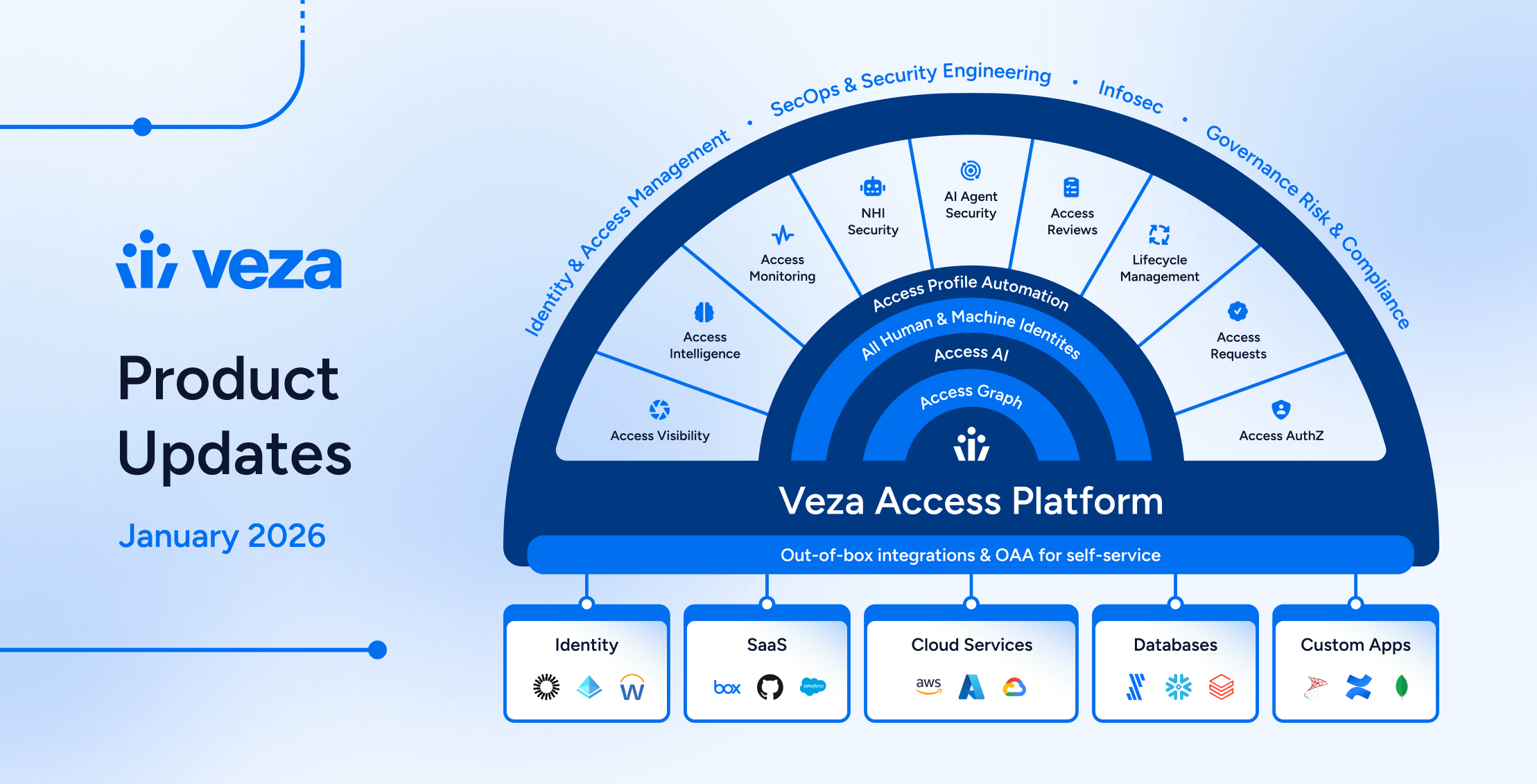

To solve these novel challenges, identity security requires a new foundation. A modern AI SPM must be built on an Access Graph: a purpose-built graph database that maps the complex web of permissions and relationships across the entire enterprise. It must encompass both human and non-human identities, because AI agents can act like either or both.
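The core computation behind such a graph can be illustrated on a toy example. This is a simplified, in-memory sketch under stated assumptions: the node names, the `MEMBER_OF` and `ROLE_GRANTS` tables, and the `effective_permissions` function are hypothetical, and a real Access Graph would live in a graph database populated from many integrations. It shows how one breadth-first traversal over nested memberships, applied identically to human and agent identities, surfaces permissions that are only reachable indirectly.

```python
"""Hypothetical sketch: computing effective permissions on a tiny access graph.

Nodes are identities (human or agent), groups, and roles. Membership edges
can nest (a group inside a group), and roles carry permissions. The dicts
below stand in for a real graph database.
"""

from collections import deque

# identity or group -> groups/roles it is a member of (may nest)
MEMBER_OF: dict[str, list[str]] = {
    "user:alice": ["group:finance"],
    "agent:invoice-bot": ["group:finance-automation"],
    "group:finance-automation": ["group:finance", "role:payments-writer"],
    "group:finance": ["role:ledger-reader"],
}

# role -> (action, resource) permissions it grants
ROLE_GRANTS: dict[str, set[tuple[str, str]]] = {
    "role:ledger-reader": {("read", "db/ledger")},
    "role:payments-writer": {("write", "api/payments")},
}


def effective_permissions(identity: str) -> set[tuple[str, str]]:
    """Breadth-first walk of membership edges, collecting every role's grants.

    The `seen` set guards against membership cycles, which some
    directories permit.
    """
    perms: set[tuple[str, str]] = set()
    seen = {identity}
    queue = deque([identity])
    while queue:
        node = queue.popleft()
        perms |= ROLE_GRANTS.get(node, set())
        for parent in MEMBER_OF.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return perms


for who in ("user:alice", "agent:invoice-bot"):
    print(who, sorted(effective_permissions(who)))
# user:alice [('read', 'db/ledger')]
# agent:invoice-bot [('read', 'db/ledger'), ('write', 'api/payments')]
```

Here the agent reaches `db/ledger` only through two levels of group nesting, the kind of indirect path that is easy to miss when permissions are inspected one table or one identity at a time.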

A comprehensive Access Graph provides a single source of truth by ingesting authorization metadata from hundreds of integrations and then computing the true effective permissions for every identity—human and agent—answering the fundamental security question: