The CISO looked at me with a mix of excitement and uncertainty. “Our AI-powered security analytics just prevented what could have been a major breach by detecting an anomalous access pattern,” she said. “But last week, that same system flagged 50,000 false positives for routine tasks. I honestly don’t know if AI is solving our identity problems or creating new ones.”

She’s not alone in this paradox. AI is fundamentally reshaping identity security, simultaneously offering powerful solutions while introducing risks we are only beginning to understand.

The AI Opportunity: Identity Security Gets Smarter

Let’s start with the good news. AI is revolutionizing how we approach identity security in four key ways:

1. Threat Detection at Machine Speed

Traditional rule-based systems cannot keep pace with modern attacks. AI changes the game by learning what “normal” looks like for each identity, then spotting deviations in real time. When a service account that typically accesses 10 databases suddenly touches 1,000, AI can flag it before any SOC analyst would notice.

Modern AI systems can baseline normal behavior for thousands of privileged accounts simultaneously, a task that is virtually impossible with manual log analysis.
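To make the baselining idea concrete, here is a minimal sketch that assumes a simple z-score test stands in for whatever model a real product uses. The function name `is_anomalous`, the threshold of 3 standard deviations, and the sample counts are all illustrative assumptions, not taken from any specific tool.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's count if it sits more than `threshold` sample standard
    deviations above this identity's own historical mean."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # perfectly flat baseline: any increase stands out
    return (today - mu) / sigma > threshold


# Hypothetical daily counts of distinct databases touched by one service account.
history = [9, 10, 11, 10, 10, 9, 11]

print(is_anomalous(history, 1000))  # True: far outside the learned baseline
print(is_anomalous(history, 11))    # False: within normal variation
```

A production system would likely use richer features (time of day, peer group, resource sensitivity), but the per-identity baseline is the core of the approach.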

2. Intelligent Access Decisions

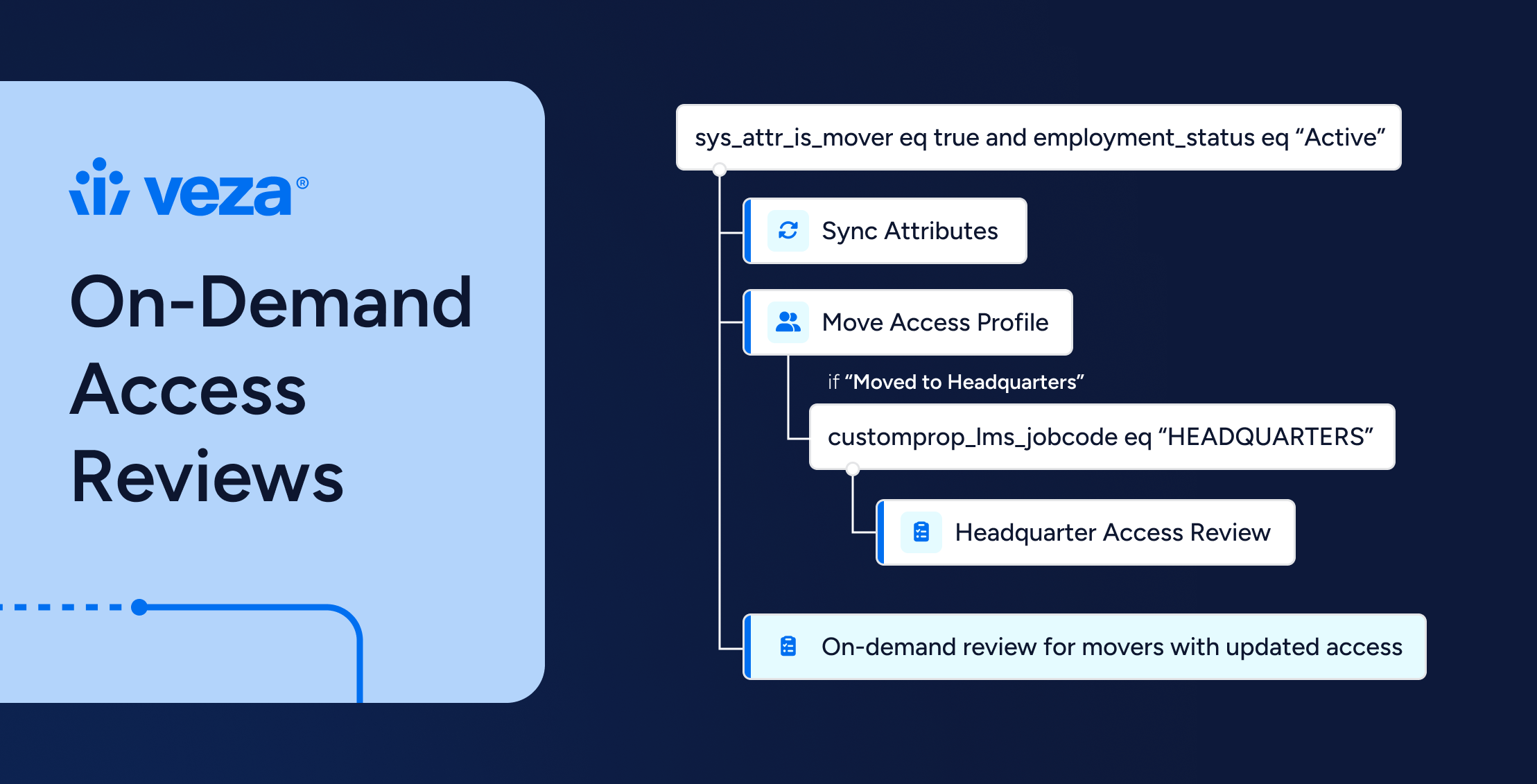

Manual access reviews have long been a compliance burden for enterprises, often requiring teams to evaluate thousands of permissions quarterly. AI is transforming this process by analyzing usage patterns, peer groups, and business context to recommend which permissions should be retained, removed, or modified.

AI also enables dynamic, context-aware access controls. Rather than maintaining permanent standing privileges, organizations can implement just-in-time access based on real-time risk scoring. Access from an unusual location triggers additional authentication. Requests for previously unaccessed sensitive data require approval. These contextual decisions happen automatically, reducing both risk and friction.

The technology shifts access management from periodic reviews to continuous intelligence, evaluating every access request against current risk factors and business needs. This approach better aligns with zero-trust principles while reducing the administrative overhead that has traditionally made least privilege impractical at scale.
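The contextual rules described above (unusual location triggers extra authentication, first-time access to sensitive data requires approval) could be sketched roughly as follows. The `AccessRequest` fields, the `decide` function, and the three outcome strings are hypothetical names chosen for illustration; a real deployment would feed a risk score from many more signals.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    resource: str
    location: str
    sensitive: bool  # whether the resource is classified as sensitive


def decide(request, usual_locations, previously_accessed):
    """Return 'allow', 'step_up_auth', or 'require_approval' for one request."""
    seen = previously_accessed.get(request.user, set())
    # Sensitive data the user has never touched before needs a human approver.
    if request.sensitive and request.resource not in seen:
        return "require_approval"
    # An unfamiliar location triggers additional authentication.
    if request.location not in usual_locations.get(request.user, set()):
        return "step_up_auth"
    return "allow"


usual_locations = {"alice": {"Berlin"}}
previously_accessed = {"alice": {"wiki", "payroll_db"}}

routine = AccessRequest("alice", "wiki", "Berlin", False)
travelling = AccessRequest("alice", "wiki", "Lagos", False)
first_time = AccessRequest("alice", "hr_records", "Berlin", True)

print(decide(routine, usual_locations, previously_accessed))     # allow
print(decide(travelling, usual_locations, previously_accessed))  # step_up_auth
print(decide(first_time, usual_locations, previously_accessed))  # require_approval
```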

3. Automated Lifecycle Management

The average enterprise onboards and offboards thousands of identities monthly. AI is transforming this from a manual, error-prone process into an intelligent, automated workflow. New employee joining the marketing team? AI knows exactly which access they need based on their role, team, and historical patterns—no more over-provisioning “just to be safe.”
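One simple way to approximate "access based on role, team, and historical patterns" is peer-group analysis: grant a new joiner only what most of their peers already hold. The sketch below assumes that approach; the 80% cutoff, the `recommend_access` name, and the entitlement labels are illustrative assumptions.

```python
from collections import Counter


def recommend_access(peer_entitlements, min_share=0.8):
    """Recommend entitlements held by at least `min_share` of peers."""
    if not peer_entitlements:
        return set()
    counts = Counter(e for ents in peer_entitlements for e in set(ents))
    total = len(peer_entitlements)
    return {e for e, n in counts.items() if n / total >= min_share}


# Hypothetical entitlements of five existing marketing team members.
peers = [
    {"crm", "cms", "analytics"},
    {"crm", "cms"},
    {"crm", "cms", "finance_db"},
    {"crm", "cms", "analytics"},
    {"crm", "analytics"},
]

print(sorted(recommend_access(peers)))  # ['cms', 'crm']
```

Note that the one-off `finance_db` grant is not propagated to the new hire, which is the point: outlier access stays an exception instead of becoming the default.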

4. Defending at Machine Speed Against AI-Powered Attacks

Attackers are already using AI to probe for identity weaknesses, automate credential stuffing, and craft sophisticated social engineering attacks. Traditional security tools simply cannot match the speed and scale of AI-powered threats.

AI-enhanced defense systems level the playing field. They can analyze millions of authentication attempts to spot AI-generated patterns, identify synthetic identities that would fool human reviewers, and detect coordinated attacks across multiple identity systems simultaneously. When attackers use AI to find and exploit dormant service accounts across thousands of systems in minutes, only AI-powered defense can respond at the same speed.

The Dark Side: When AI Becomes the Threat

But here’s where that CISO’s wariness comes in. According to the 2025 Verizon DBIR, over 30% of reported breaches involved misuse of non-human or AI agent credentials, underscoring the real-world risk. For every problem AI solves, it seems to create a new one.

1. The Explosion of AI Identities

We are already drowning in non-human identities (NHIs), with machine identities outnumbering humans over 20:1 in most organizations. In large organizations, this ratio can be 45:1—a figure Gartner expects to more than double by 2026. Now add AI agents to the mix. Each copilot, assistant, and autonomous agent needs its own identity and permissions. Worse, these AI agents operate at superhuman speed and scale.

For example, a legal discovery bot will likely need read access to all corporate email to function. One compromised AI agent identity, and an attacker has the company’s entire communication history. The blast radius of AI identities dwarfs anything we’ve seen before.

2. Privilege Sprawl on Steroids

AI agents are hungry for data. To be useful, they need broad access across systems. But unlike humans, who access resources sequentially, AI agents can work across thousands of resources in parallel.

Consider Microsoft Copilot integrated with SharePoint—a scenario playing out in enterprises worldwide. For Copilot to provide intelligent assistance, it needs to search across all SharePoint sites, documents, and libraries that a user can access. But here’s the challenge: many organizations have years of accumulated permissions in SharePoint, including forgotten sites, over-shared folders, and stale access rights. Suddenly, Copilot can surface sensitive documents from that old project folder someone forgot to restrict, or confidential data from a site that should have been archived years ago.

Traditional least privilege principles break down when an AI agent legitimately needs to access vast swaths of data to function. How do organizations restrict an AI financial analyst that needs to see all transaction data? Or an AI code reviewer that needs access to every repository? The very capabilities that make these AI agents valuable—comprehensive access and rapid processing—also make them incredibly risky from a security perspective.

3. The Attribution Challenge

When an AI agent accesses sensitive data, who is responsible? The human who invoked it? The team that configured it? The vendor that created it? This attribution problem is not just philosophical—it has real implications for compliance, forensics, and insider threat programs.

Organizations are discovering that their existing logging and monitoring systems cannot distinguish between human and AI agent actions. When an AI assistant accesses an entire customer database, security teams struggle to determine whether it’s malicious activity, a misconfiguration, or normal operation. Traditional security information and event management (SIEM) systems were designed for human actors, not AI agents that can process thousands of requests simultaneously.

This visibility gap creates significant challenges for incident response and compliance reporting. Without clear attribution and activity tracking, organizations cannot effectively investigate incidents or demonstrate proper access controls to auditors. The problem compounds as AI agents interact with other automated systems, creating chains of actions that become increasingly difficult to trace back to their origin.
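One way to narrow this gap is to record, on every audit event, both the type of actor and the chain of identities it acted on behalf of. The following is a sketch under that assumption; the `AuditEvent` fields and the identifier prefixes (`user:`, `agent:`) are hypothetical and not drawn from any particular SIEM schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AuditEvent:
    actor_id: str
    actor_type: str  # "human" or "ai_agent"
    action: str
    resource: str
    # Delegation chain, origin first: who invoked whom to reach this actor.
    on_behalf_of: list = field(default_factory=list)


def originating_actor(event):
    """Trace an event back to the identity that started the chain."""
    return event.on_behalf_of[0] if event.on_behalf_of else event.actor_id


event = AuditEvent(
    actor_id="agent:report-writer",
    actor_type="ai_agent",
    action="read",
    resource="customers_db",
    on_behalf_of=["user:alice", "agent:assistant"],
)

print(json.dumps(asdict(event)))  # structured record for the log pipeline
print(originating_actor(event))   # user:alice
```

With the chain captured at write time, an investigator can answer "which human started this?" without reconstructing it from timestamps across systems.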

Finding Balance: A Practitioner’s Guide

Organizations looking to proactively address AI’s dual nature—both opportunity and risk—should implement several key strategies:

Start with Comprehensive Visibility

Before AI agent deployment, organizations need complete visibility into their existing authorization landscape—not just identity counts, but actual permissions across all systems. This means understanding which service accounts can access specific databases, which API keys can modify cloud resources, and which tokens can read sensitive files. Organizations must map permissions across cloud platforms, SaaS applications, data repositories, and on-premises systems. AI agents will inherit and amplify these existing permission structures, making cross-platform visibility essential.

Implement Risk-Based Access Intelligence

Smart organizations are moving beyond static permission assignments to risk-based access models. This requires understanding not just what access exists, but the potential blast radius of each permission. High-risk combinations—like an AI agent with both read access to sensitive data and write access to external systems—need immediate attention. Prioritizing remediation based on actual risk, rather than treating all permissions equally, enables practical security improvements without disrupting operations.
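As a rough illustration of flagging high-risk combinations, the sketch below checks each identity's permission set against a list of pairs considered dangerous together. The permission strings, the `TOXIC_PAIRS` list, and the identity names are illustrative assumptions; real policies would be defined by the organization.

```python
# Pairs of permissions that are risky when held by the same identity.
TOXIC_PAIRS = [
    ("read:sensitive_data", "write:external_system"),
]


def find_toxic_combinations(identities, toxic_pairs=TOXIC_PAIRS):
    """Return (identity, perm_a, perm_b) for every toxic pair an identity holds."""
    findings = []
    for name, perms in identities.items():
        for a, b in toxic_pairs:
            if a in perms and b in perms:
                findings.append((name, a, b))
    return findings


identities = {
    "agent:summarizer": {"read:sensitive_data", "write:external_system"},
    "agent:indexer": {"read:sensitive_data"},
    "svc:webhook": {"write:external_system"},
}

print(find_toxic_combinations(identities))
# [('agent:summarizer', 'read:sensitive_data', 'write:external_system')]
```

Only the agent holding both halves of the pair is flagged, which keeps remediation focused on the combination that creates an exfiltration path.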

Design for Least Privilege from Day One

While AI agents require broader access, organizations should implement controls at the data level, not just the system level. Effective approaches include:

- Permission boundaries tied to specific data classifications

- Access controls that understand data sensitivity across platforms

- Automated permission right-sizing based on actual usage patterns

- Continuous validation that permissions match business requirements
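A permission boundary tied to data classification can be sketched as a ceiling: the agent may touch anything at or below its assigned level, regardless of which system holds the data. The four classification levels and the `within_boundary` helper below are assumptions for illustration only.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


def within_boundary(agent_ceiling, resource_label):
    """Allow access only if the resource is at or below the agent's ceiling."""
    return resource_label <= agent_ceiling


# Hypothetical agent cleared up to INTERNAL data on every platform.
ceiling = Classification.INTERNAL

print(within_boundary(ceiling, Classification.PUBLIC))        # True
print(within_boundary(ceiling, Classification.INTERNAL))      # True
print(within_boundary(ceiling, Classification.CONFIDENTIAL))  # False
```

Because the check depends on the data's label instead of the system it lives in, the same boundary can be enforced consistently across platforms, provided the data is classified in the first place.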

Enable Permissions-Level Monitoring

Traditional access logging proves insufficient for AI agents. Organizations need intelligence about how permissions are actually used. This includes tracking:

- Which specific data resources AI agents access across all platforms

- Permission usage patterns compared to granted authorizations

- Dormant permissions that create unnecessary risk

- Cross-system permission chains that AI agents might exploit
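Comparing granted permissions with actual usage is straightforward once both are available as data. The sketch below assumes a last-used date per permission and a 90-day idle window; both the window and the names (`dormant_permissions`, the permission strings) are illustrative assumptions.

```python
from datetime import date, timedelta


def dormant_permissions(granted, last_used, today, max_idle_days=90):
    """Return granted permissions never used or idle longer than the window."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        p for p in granted
        if last_used.get(p) is None or last_used[p] < cutoff
    )


# Hypothetical grants and usage records for one AI agent.
granted = {"read:crm", "write:billing", "read:hr_files"}
last_used = {
    "read:crm": date(2025, 5, 20),       # used recently
    "write:billing": date(2024, 11, 2),  # idle for months
    # "read:hr_files" has never been used
}

print(dormant_permissions(granted, last_used, today=date(2025, 6, 1)))
# ['read:hr_files', 'write:billing']
```

The dormant list is a natural input to right-sizing: each entry is a candidate for removal that should not disrupt the agent's observed behavior.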

Create AI-Specific Governance

Existing identity governance frameworks lack provisions for entities processing millions of records per second. The Cloud Security Alliance now recommends organizations establish AI-specific identity governance that spans all data platforms. Effective governance requires:

- Unified permission reviews across cloud, SaaS, and on-premises systems

- Risk scoring that considers cumulative access across all platforms

- Automated detection of toxic permission combinations

- Remediation workflows that work across diverse technology stacks
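Cumulative risk scoring can be approximated by summing a per-grant score across every platform an identity touches. The weights, the multiplication of sensitivity by access level, and the identity names below are assumptions made for illustration; they are not a published scoring standard.

```python
# Illustrative weights; a real program would calibrate these to its own risk model.
ACCESS_WEIGHT = {"read": 1, "write": 2, "admin": 3}
SENSITIVITY_WEIGHT = {"low": 1, "medium": 3, "high": 5}


def cumulative_risk(grants):
    """Sum sensitivity x access weight over all of an identity's grants."""
    return sum(
        SENSITIVITY_WEIGHT[sensitivity] * ACCESS_WEIGHT[access]
        for _platform, sensitivity, access in grants
    )


# Hypothetical grants as (platform, data sensitivity, access level).
grants_by_identity = {
    "agent:finance-analyst": [
        ("cloud", "high", "read"),
        ("saas", "medium", "write"),
        ("on_prem", "high", "admin"),
    ],
    "agent:helpdesk-bot": [("saas", "low", "read")],
}

scores = {name: cumulative_risk(g) for name, g in grants_by_identity.items()}
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
print(ranked)  # [('agent:finance-analyst', 26), ('agent:helpdesk-bot', 1)]
```

Scoring per identity across platforms, instead of per platform, surfaces agents whose individual grants look modest but add up to a large blast radius.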

The key insight: AI security isn’t just about managing AI agents—it’s about understanding and controlling the entire permission fabric they operate within. Organizations that achieve comprehensive visibility and intelligent remediation across their complete data landscape will be best positioned to harness AI’s benefits while managing its risks.

The Path Forward: Digital Trust in an AI World

AI represents a fundamental shift in business operations, not merely another technology trend. Success requires establishing digital trust within this new paradigm.

Traditional identity security models—designed for human actors operating at human speed—require fundamental architectural changes. Modern frameworks must accommodate millions of AI agents operating at machine speed while maintaining security and compliance requirements.

The industry has successfully navigated similar transitions. Web applications challenged network-perimeter security models twenty years ago. Cloud and mobile computing disrupted enterprise boundaries a decade later. AI now challenges human-centric identity models. Each transition demanded innovation, and the industry adapted.

Potential Next Steps

Immediate Actions (Week 1): Complete an inventory of all AI tools and agents currently deployed across the organization.

Short-term (Month 1): Conduct permission assessments for each AI agent, applying the same standards used for privileged account reviews.

Medium-term (Quarter 1): Deploy access monitoring capabilities for AI agent activities, prioritizing coverage over perfection.

Long-term (Year 1): Establish a comprehensive AI identity governance framework addressing the full lifecycle of AI agents.

The transformation of identity security through AI is inevitable. Organizations must decide whether to harness this transformation as a competitive advantage or allow it to become a liability. Strategic preparation and thoughtful implementation will determine the outcome.