Why the ISPM playbook still works

Most identity problems don’t start as security problems. They start as success stories.

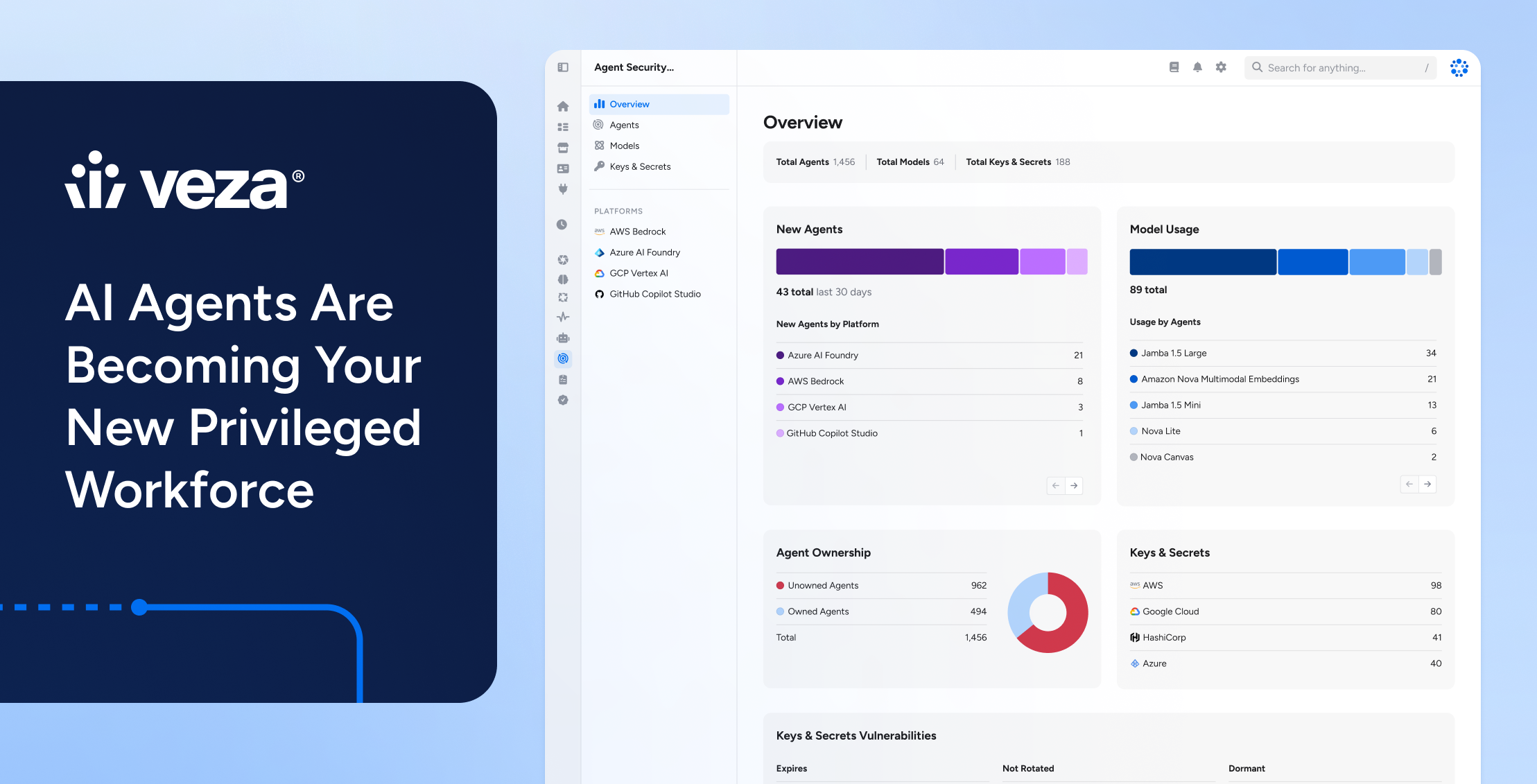

A team builds an AI agent to reduce ticket backlog or accelerate account research. The first version is narrow and harmless. Then it gets access to the CRM so it can pull customer context. Someone adds a connector to a knowledge base. Another adds a tool to update records automatically. The business sees velocity and wants more of it. That is the rational outcome of a good pilot.

Security shows up for phase two – not to slow anyone down, but to ask the question that determines whether the system can scale without becoming a silent liability: Who owns this agent, what should it access, and what can it actually access?

If the answer is uncertain, you have already stepped into a familiar identity story: overpermissioning, unclear ownership, fragmented inventories. The only difference is tempo – AI agents compress years of permission drift into weeks.

This is exactly why treating AI agent security as a brand-new category is a mistake – AI agent security is Identity Security Posture Management applied to the newest identity class.

If you want a lightweight public anchor for the ISPM framing, this ISPM overview is enough context without turning into a glossary tour.

The three layers of AI agent security that define what “access” means

The architecture view matters because it exposes why traditional, human-centric IAM assumptions break down – and why they can’t keep pace.

Agentic AI systems ultimately span three essential layers: model, infrastructure, and application. Each layer is vital, and each must be secured by the time these systems are deployed at enterprise scale.

In reality, many organizations do not control all three layers – they consume models as applications and skip earlier stages like pretraining and fine-tuning. But this doesn’t reduce the security burden – it increases it, because the organization is adopting powerful capabilities faster than it is building the trust and controls around them.

By the time these systems are deployed, protections across the model, infrastructure, and application layers must work together. If they don’t, security gaps emerge that undermine the trust agentic AI requires to operate safely.

MCP is the accelerant, not the root cause

Model Context Protocol is taking off because it solves a real engineering bottleneck. Nobody wants to build a bespoke integration for every AI-to-database or AI-to-SaaS interaction. MCP standardizes the discovery of resources and the invocation of tools, eliminating that friction. Check out this neutral overview for more information: https://modelcontextprotocol.io/

From a security angle, MCP doesn’t create new categories of risk – it amplifies existing ones across more systems at a faster pace.

The identity risks that surface with MCP follow the same patterns identity teams already know how to manage, except now they must be handled at agent speed:

- Permissions drift over time

- Identity masquerading

- Overprivileged access

- Cross-system “hidden” privileges

The key takeaway is not that MCP is unsafe. The takeaway is that MCP makes an identity-centric approach mandatory if you want agentic AI to scale without quietly expanding the blast radius of over-privileged agents.
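To make two of the risks above concrete, here is a minimal Python sketch of how permission drift and overprivileged access could be surfaced for a single agent. The `AgentSnapshot` schema, the permission strings, and the agent name are hypothetical, chosen only for illustration; a real program would populate them from its own inventory and activity logs.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSnapshot:
    """Point-in-time view of one agent's access (hypothetical schema)."""
    agent_id: str
    granted: set[str] = field(default_factory=set)  # permissions the agent holds
    used: set[str] = field(default_factory=set)     # permissions seen in activity logs


def permission_drift(baseline: AgentSnapshot, current: AgentSnapshot) -> set[str]:
    """Permissions granted since the baseline snapshot was approved."""
    return current.granted - baseline.granted


def unused_permissions(snapshot: AgentSnapshot) -> set[str]:
    """Granted permissions with no observed use: candidates for removal."""
    return snapshot.granted - snapshot.used


# The pilot started narrow, then picked up CRM and knowledge base access.
baseline = AgentSnapshot("ticket-triage-agent", granted={"tickets:read"})
current = AgentSnapshot(
    "ticket-triage-agent",
    granted={"tickets:read", "crm:read", "kb:read", "crm:write"},
    used={"tickets:read", "crm:read"},
)

drift = permission_drift(baseline, current)
unused = unused_permissions(current)
print(sorted(drift))   # ['crm:read', 'crm:write', 'kb:read']
print(sorted(unused))  # ['crm:write', 'kb:read']
```

The set differences are deliberately simple. The hard part in practice is collecting trustworthy `granted` and `used` data across systems, not the comparison itself.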

What this looks like as an ISPM problem

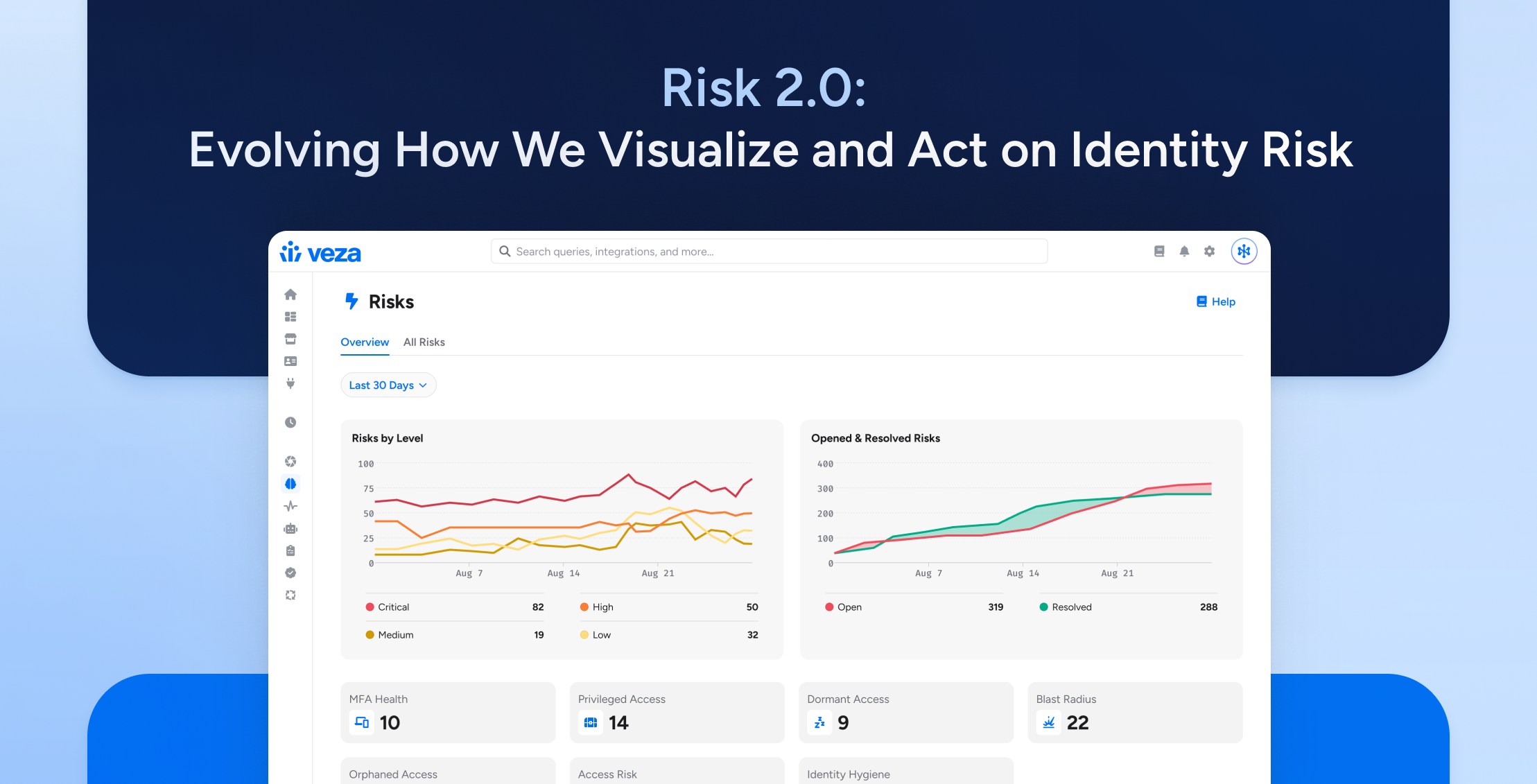

ISPM exists because granting access isn’t the hard part anymore. The hard part is continuous posture management across humans and non-humans in a world where every environment changes constantly.

AI agents fit that posture model almost perfectly:

- They are non-human identities.

- They run cross-system workflows.

- They are easy to over-permission during rapid delivery cycles.

- Their access and toolchains evolve quickly.

So the controls that matter are the ones the AI Agent Security data sheet already emphasizes:

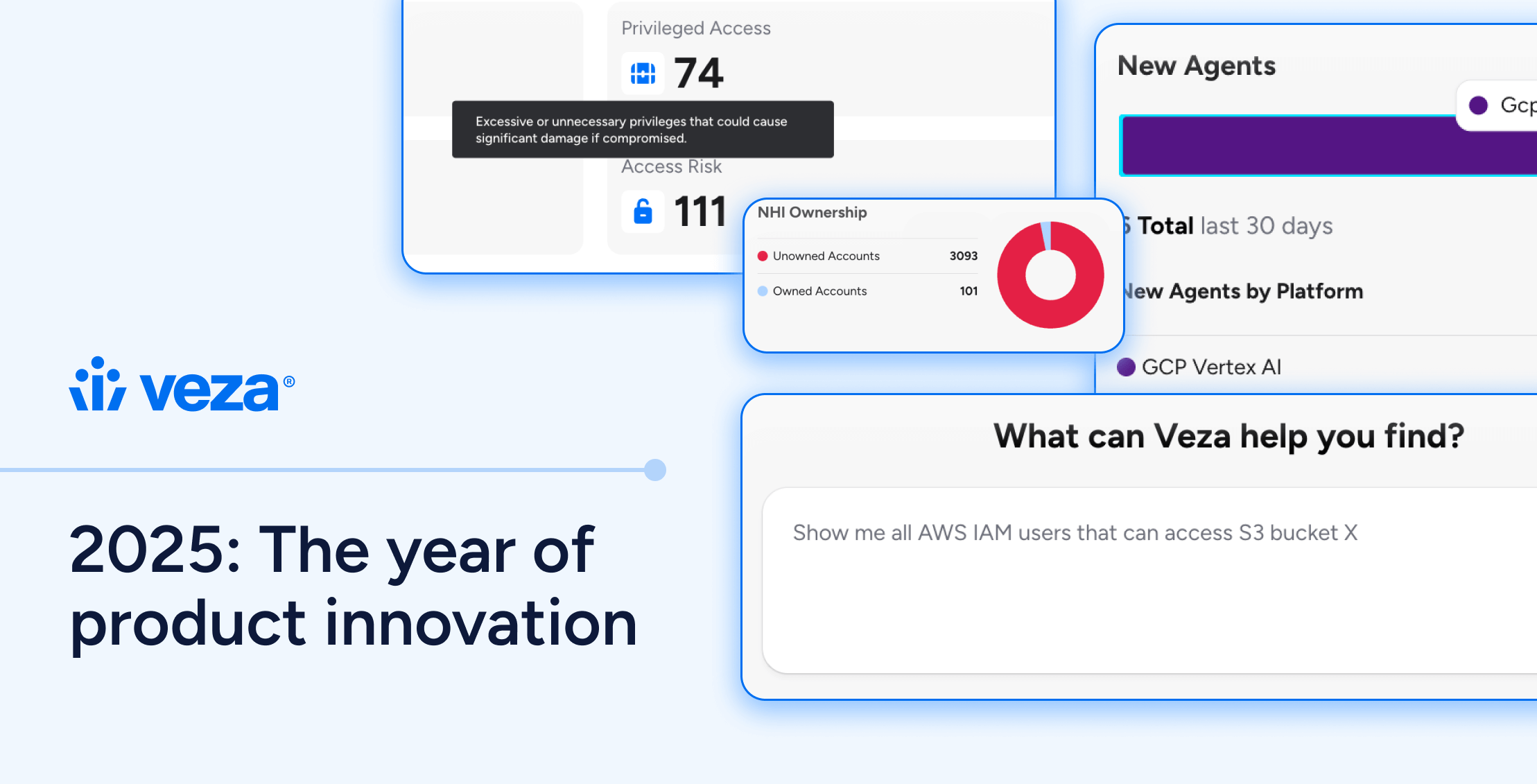

- Discovery across major agent platforms and services.

- Human ownership that follows lifecycle changes.

- Effective access visibility, not just declared permissions.

- Continuous assessment and guardrails to detect drift before it becomes an incident or audit problem.

That is the operational spine of ISPM – and it’s also what practitioners will trust, because it reflects how real identity programs survive changes in the AI identity landscape.
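The gap between declared permissions and effective access is easiest to see as a graph problem. The sketch below is an illustration under stated assumptions, not a description of how any product computes access: the graph, the node naming scheme (`agent:`, `tool:`, `svc:`, `resource:`), and the `DECLARED` set are all hypothetical.

```python
from collections import deque

# Hypothetical access graph: an edge means "can act as" or "can reach".
ACCESS_GRAPH: dict[str, list[str]] = {
    "agent:research-bot": ["tool:crm-connector", "tool:kb-search"],
    "tool:crm-connector": ["svc:crm-integration"],
    "tool:kb-search": ["resource:kb/articles"],
    "svc:crm-integration": ["resource:crm/accounts", "resource:crm/contracts"],
}

# What the agent's own configuration declares (also hypothetical).
DECLARED = {"resource:crm/accounts", "resource:kb/articles"}


def effective_access(graph: dict[str, list[str]], start: str) -> set[str]:
    """Return every resource reachable from `start` via breadth-first search."""
    seen = {start}
    queue = deque([start])
    resources = set()
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt in seen:
                continue
            seen.add(nxt)
            if nxt.startswith("resource:"):
                resources.add(nxt)
            queue.append(nxt)
    return resources


effective = effective_access(ACCESS_GRAPH, "agent:research-bot")
hidden = effective - DECLARED  # reachable, but never declared
print(sorted(hidden))  # ['resource:crm/contracts']
```

Here the agent never declares access to contracts, yet it inherits that access through the service account behind its CRM connector. That is the cross-system "hidden" privilege pattern in miniature.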

Where Veza fits

Veza’s role is clearest when mapped to the three-layer architecture.

- At the model layer, Veza provides access governance and policy control for services like OpenAI, Azure OpenAI Service, and AWS Bedrock.

- At the infrastructure layer, Veza extends protection to vector databases, with current support including Postgres with pgvector and a stated plan to support additional vector stores.

- At the application layer, Veza helps secure agentic systems built on top of leading models, where access control and identity context determine whether these systems remain trustworthy at enterprise scale.

The “why” that matters to CISOs

CISOs don’t fund AI agent security because it’s trendy. They fund it because it is becoming a gating factor for scaling agentic AI safely.

The real executive question is simple:

Can we expand autonomous capability without losing control of identity risk, compliance posture, and customer trust?

The three-layer framing gives CISOs a clear maturity narrative. Early-stage teams can focus on model access governance. As the system becomes more autonomous and more connected, infrastructure and application-layer controls become mandatory.

For an external reference that helps frame security scope as autonomy increases, AWS’s agentic AI scoping perspective is a useful neutral lens. https://aws.amazon.com/blogs/security/the-agentic-ai-security-scoping-matrix-a-framework-for-securing-autonomous-ai-systems/

The “why” that matters to IAM and IGA teams

For practitioners, the problem isn’t philosophical – it is operational.

The hardest part of agent governance is not writing policy. It’s enforcing the policy across fast-moving teams and platforms that don’t share a common identity model.

The pattern is already familiar:

- Inventories are incomplete.

- Ownership is fuzzy.

- Access paths are difficult to validate.

- Review cycles cannot keep up with the pace of change.

The reason AI agents feel disruptive is that they combine all four problems at once in a compressed time frame. But if your program can normalize agents as first-class identities with clear owners and continuously assessed access posture, everything else becomes manageable.
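As a rough sketch of what treating agents as first-class identities could look like, the Python below models an inventory record with an owner and a review date, then flags posture gaps. The `AgentIdentity` fields, the agent names, the email addresses, and the 30-day review window are assumptions made for the example, not a recommended policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class AgentIdentity:
    """Inventory record for one agent (hypothetical schema)."""
    name: str
    owner: Optional[str]                # accountable human, if any
    last_access_review: Optional[date]  # None means never reviewed


def posture_findings(
    agent: AgentIdentity,
    today: date,
    max_review_age: timedelta = timedelta(days=30),  # assumed review window
) -> list[str]:
    """Return the posture gaps found for a single agent."""
    findings = []
    if not agent.owner:
        findings.append("no accountable human owner")
    if agent.last_access_review is None:
        findings.append("access never reviewed")
    elif today - agent.last_access_review > max_review_age:
        findings.append("access review is stale")
    return findings


today = date(2025, 1, 31)
inventory = [
    AgentIdentity("ticket-triage-agent", "alice@example.com", date(2025, 1, 20)),
    AgentIdentity("account-research-agent", None, date(2024, 10, 1)),
    AgentIdentity("record-updater-agent", "bob@example.com", None),
]

report = {agent.name: posture_findings(agent, today) for agent in inventory}
for name, findings in report.items():
    print(name, findings or "ok")
```

Running a check like this on a schedule, rather than once per review cycle, is the point of continuous assessment: an agent that loses its owner or misses a review shows up as a finding as soon as the inventory changes.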

Closing

AI agents are not rewriting the fundamentals of identity security. They are simply forcing us to apply those fundamentals to a new class of autonomous identities faster than expected.

Your win condition is not “we secured AI.”

Your win condition is “we extended ISPM-grade identity discipline to AI agents before agent sprawl became ungoverned and unmanaged at scale.”

Discovery. Ownership. Effective access. Continuous posture.

That’s the playbook. You already know how to run it – the scope has just expanded.

Next Steps

If you’re starting to treat AI agents like the newest privileged identity class, these are the most useful next steps depending on where you are in the journey.

Start with the high-level operating model and platform scope in AI Agent Security.

Confirm what you can discover, what you can attribute to owners, and where least privilege enforcement becomes practical.

For the technical and coverage details you will want for internal alignment, grab the AI Agent Security data sheet. This is the quick reference for platform support and the control model you can map to ISPM.

If you are ready to validate this against your real agent sprawl and current governance workflows, request a demo.