Agentic AI is reshaping how applications engage with the world, unlocking the ability to reason, plan, and act autonomously. As enterprises rush to embrace these new capabilities, one reality is becoming clear: agentic AI systems will only be adopted as fast as organizations trust them.

At the architectural level, agentic AI systems are built on three essential layers:

| LLM Layer | Role in Agentic AI |
| --- | --- |
| Model | The core intelligence that enables reasoning and decision-making. |
| Infra | The knowledge engine, often a vector database or AI memory, that grounds the model’s actions in real information. |
| Application | The orchestration of models and data into intelligent, autonomous behaviors. |

Each layer is vital — and each must be protected. Focusing on only one or two leaves enterprises exposed to risks that could compromise not just security, but the very trust that agentic AI depends upon.

Security Across the Full Agentic AI Lifecycle

While the full lifecycle of agentic AI development spans six stages, enterprises do not always move through every stage. Many organizations adopt agentic AI by consuming models directly at the inference stage, bypassing earlier phases like pretraining and fine-tuning. Others may engage with multiple stages but rarely cover the full end-to-end journey.

However, whether enterprises build, customize, or simply deploy agentic AI solutions, understanding the complete lifecycle provides important context for where security must be applied. The key stages include:

- Pretraining: Building foundational knowledge through vast datasets.

- Fine-tuning: Specializing models for targeted tasks or industries.

- Instruction tuning: Teaching models to better follow structured human guidance.

- Reinforcement Learning from Human Feedback (RLHF): Iteratively refining model behaviors through human feedback loops.

- Deployment: Integrating models into operational environments.

- Inference: Live usage by applications, where agentic AI systems interact with real-world users.

The need for security is not uniform across these stages. Early in the process, the focus is naturally on protecting the model and the data. As the system evolves toward deployment and live usage, protecting the application layer becomes equally critical.

The matrix below highlights which layers require protection at each stage:

| LLM Layer | Pretraining | Fine-tuning | Instruction Tuning | RLHF | Deployment | Inference |
| --- | --- | --- | --- | --- | --- | --- |
| Model | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Infra | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| Application | ❌ | ❌ | ✅ | ✅ | ✅ | ✅ |

✅ = Protection Required | ❌ = Not Applicable

By the time an agentic AI system reaches deployment, all three layers (model, infra, and application) must be secured. Without this comprehensive approach, enterprises risk security gaps that can erode both operational integrity and trust.
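For teams that want to check their own coverage against this matrix, it can be encoded as a small lookup. The following is only a minimal sketch in Python: the names `Layer`, `STAGES`, `PROTECTION_MATRIX`, `layers_to_protect`, and `coverage_gaps` are hypothetical and chosen for illustration. They are not part of any Veza product or API.

```python
from enum import Enum


class Layer(Enum):
    """The three layers from the table above (hypothetical names)."""
    MODEL = "Model"
    INFRA = "Infra"
    APPLICATION = "Application"


# The six lifecycle stages, in the order listed in the article.
STAGES = [
    "Pretraining",
    "Fine-tuning",
    "Instruction Tuning",
    "RLHF",
    "Deployment",
    "Inference",
]

# Stages at which each layer requires protection, transcribed from the matrix.
# The application layer is marked "Not Applicable" for the first two stages.
PROTECTION_MATRIX = {
    Layer.MODEL: set(STAGES),
    Layer.INFRA: set(STAGES),
    Layer.APPLICATION: set(STAGES) - {"Pretraining", "Fine-tuning"},
}


def layers_to_protect(stage: str) -> list[Layer]:
    """Return the layers that require protection at the given stage."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage!r}")
    return [layer for layer in Layer if stage in PROTECTION_MATRIX[layer]]


def coverage_gaps(stage: str, protected: set[Layer]) -> list[Layer]:
    """Return the required layers that are not yet protected at this stage."""
    return [layer for layer in layers_to_protect(stage) if layer not in protected]
```

Under these assumptions, `layers_to_protect("Fine-tuning")` returns only the model and infra layers, while `coverage_gaps("Deployment", {Layer.MODEL})` reports that the infra and application layers still need attention.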

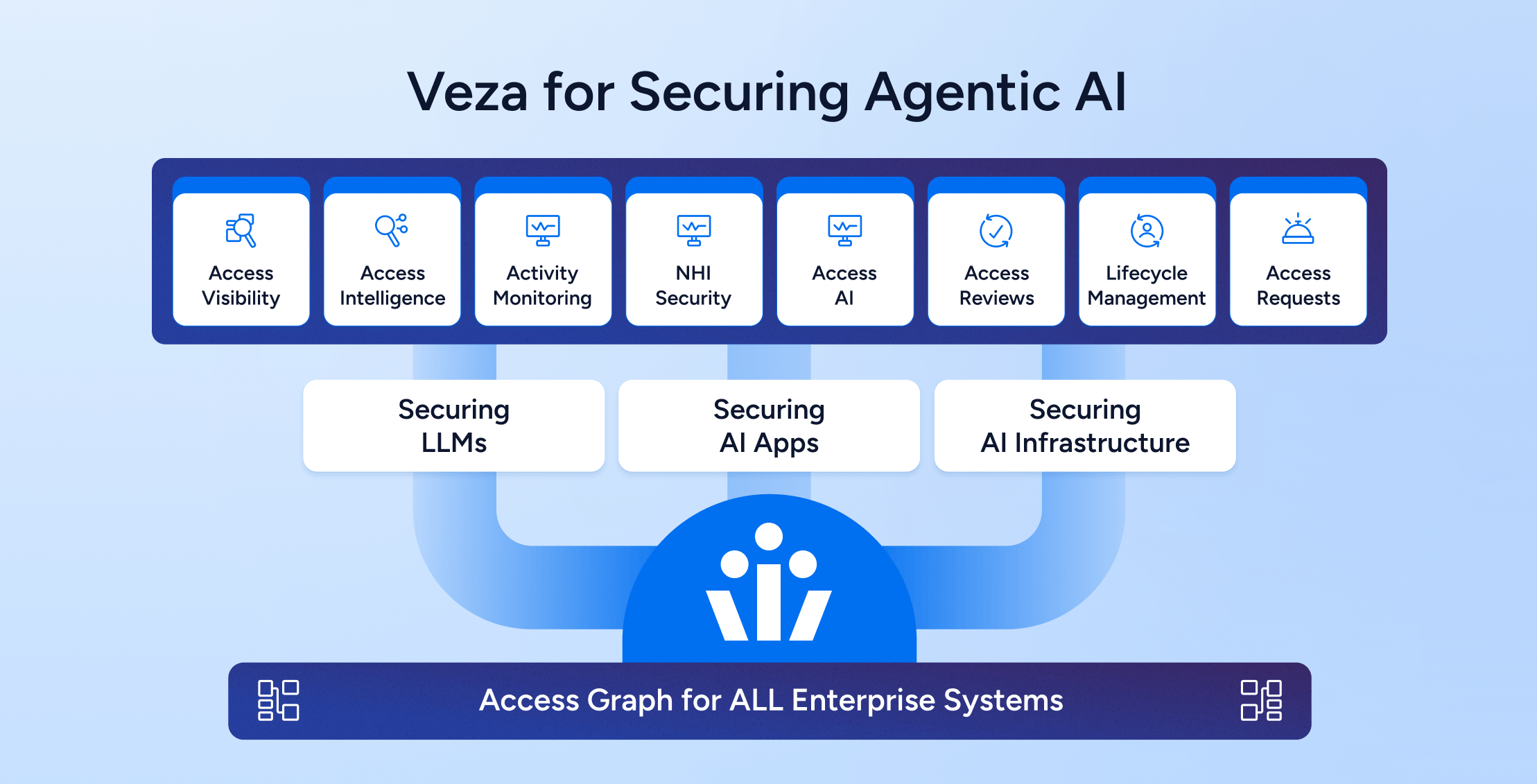

Veza’s Unique Role in Securing Agentic AI

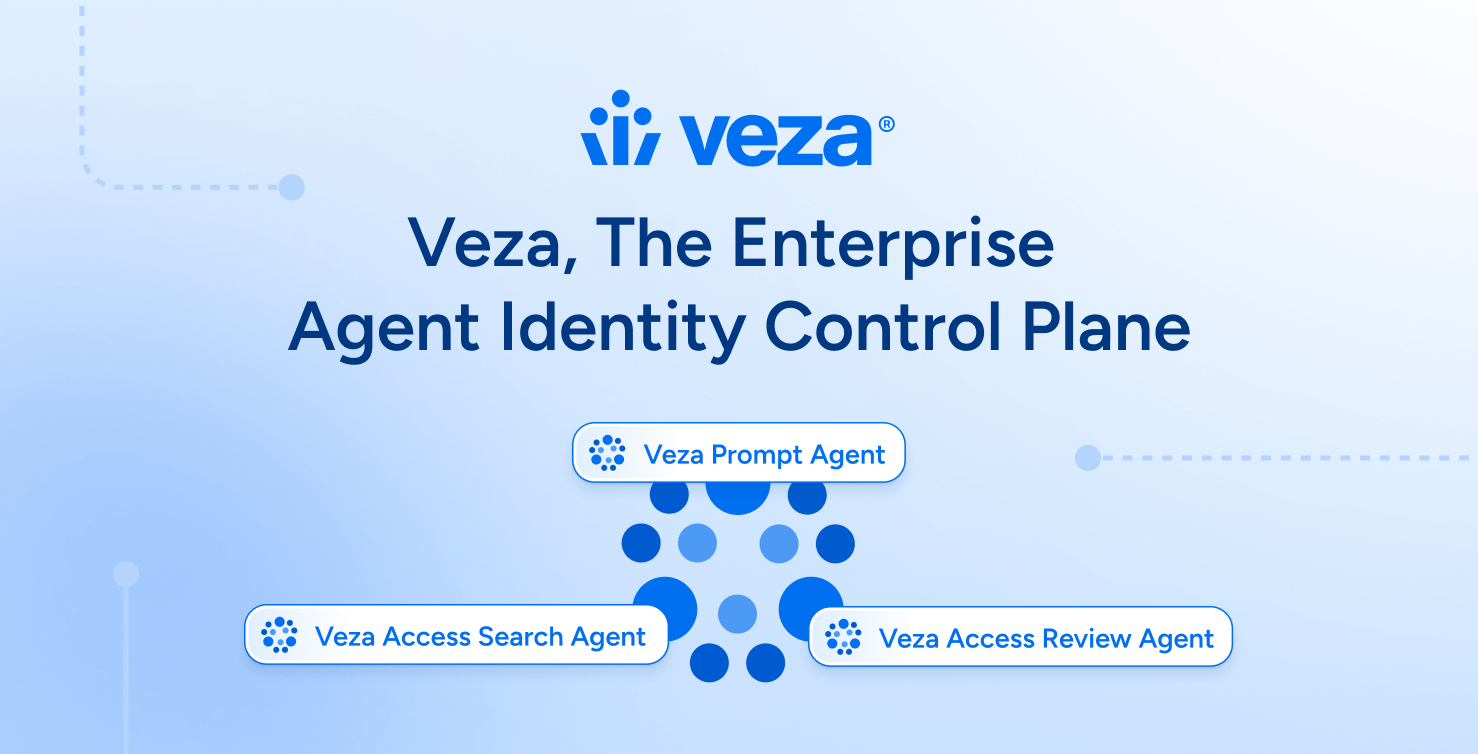

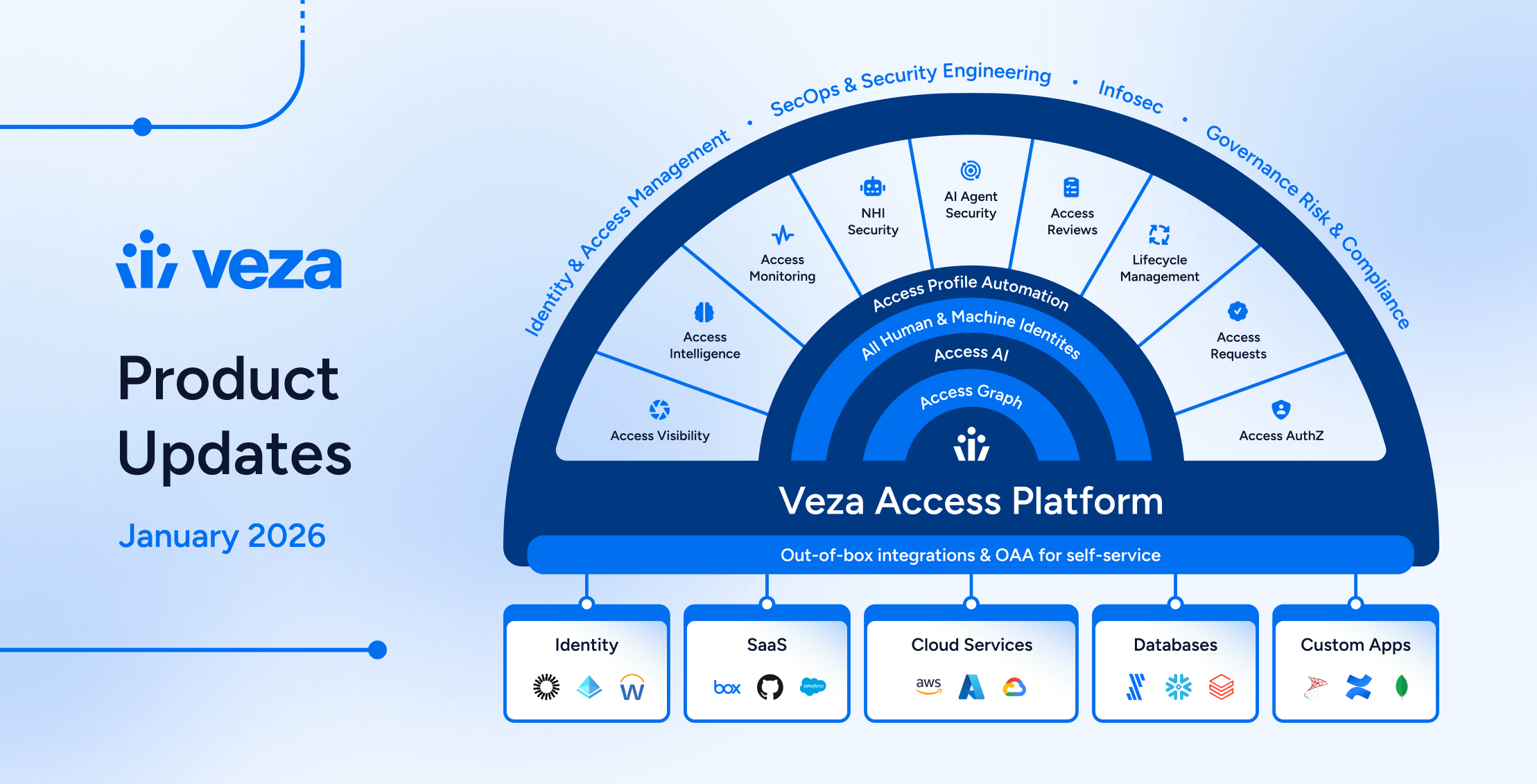

Veza is uniquely positioned to deliver the identity access security, access visibility, and control needed to protect agentic AI systems across all layers and lifecycle stages.

At the model layer, Veza provides access governance and policy control for services such as OpenAI, Azure OpenAI Service, and AWS Bedrock, ensuring that core AI services are used securely from training through production.

For the infra layer, Veza extends protection to vector databases such as Postgres with pgvector, helping enterprises govern access, track usage, and preserve the integrity of their knowledge assets. We plan to support more infra-layer technologies, such as Pinecone, Milvus, Qdrant, and Redis with Vector Search, in the near future.

At the application layer, Veza secures agentic systems built on top of leading models, including solutions from OpenAI. By enforcing access controls and providing visibility into operations, Veza empowers organizations to run modern AI systems and applications (Perplexity AI, Microsoft Copilot, etc.) without sacrificing trust and governance.

| Veza Protection Focus | Example Technologies |
| --- | --- |
| Model Governance | AWS Bedrock, Azure OpenAI, Azure AI Studio, OpenAI, etc. |
| Infra Layer Governance | PostgreSQL with pgvector, Pinecone, Milvus, Qdrant, Redis Vector Search |
| Application Security | OpenAI apps, Perplexity AI apps, Microsoft Copilot, others |

This full-spectrum protection ensures enterprises can move faster, innovate more boldly, and deploy agentic AI solutions with the confidence that security is not an afterthought — it is foundational.

Building the Future of Agentic AI on Trust

Agentic AI has the potential to revolutionize industries, from healthcare to finance to manufacturing. But its future depends on more than technical breakthroughs — it depends on trust, which is built upon visibility, intelligence, and control.

Access visibility and access control are not barriers to adoption; they are the enablers that will allow enterprises to unleash the true power of agentic AI. Without comprehensive protection across the model, infra, and application layers, organizations will hesitate to deploy these systems at scale.

Veza is helping lead the way. By securing every layer at every stage of the agentic AI lifecycle, Veza empowers enterprises to move beyond fear, embrace innovation, and realize the transformative potential of intelligent, autonomous applications.

The future of agentic AI isn’t just about what systems can do. It’s about whether enterprises — and society — trust them enough to let them.